Case Study: Tracking large collectives of unmarked animals

idtracker.ai is a tool designed to identify and track the position of every individual in a collective regardless of the species or the size of the animals

Animals are studied for many reasons, from medical research to gaining a deeper understanding of their behaviour. Zebrafish, in particular, are commonly studied because they are readily available, share 70% of their genes with humans, and have a genetic structure similar to ours. In addition, 84% of genes known to be associated with a human disease have a zebrafish counterpart.

But how do you track or study large communities of smaller animals?

Machine-vision-based idtracker.ai

Created by the team at the Collective Behaviour Lab at Champalimaud Research in Lisbon, Portugal, idtracker.ai is a tool designed to identify and track the position of every individual in a collective, regardless of the species or the size of the animals. In this case, idtracker.ai can track a large collective of up to 100 juvenile zebrafish with 99.9% accuracy. The system can also track single individuals. To do so, it uses two deep networks: one that detects when animals touch or cross, and a second that identifies each individual.

The Set-up

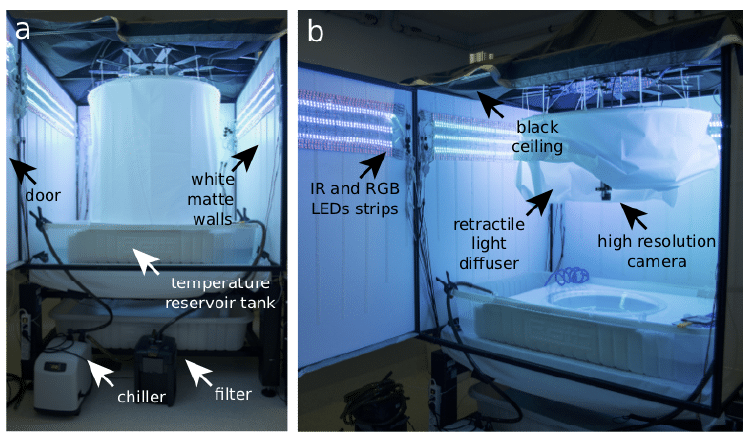

The system consists of a 70 cm diameter custom-made tank with a water recirculating system. The tank is placed inside a box built with matte white acrylic walls with a door to allow for easy access.

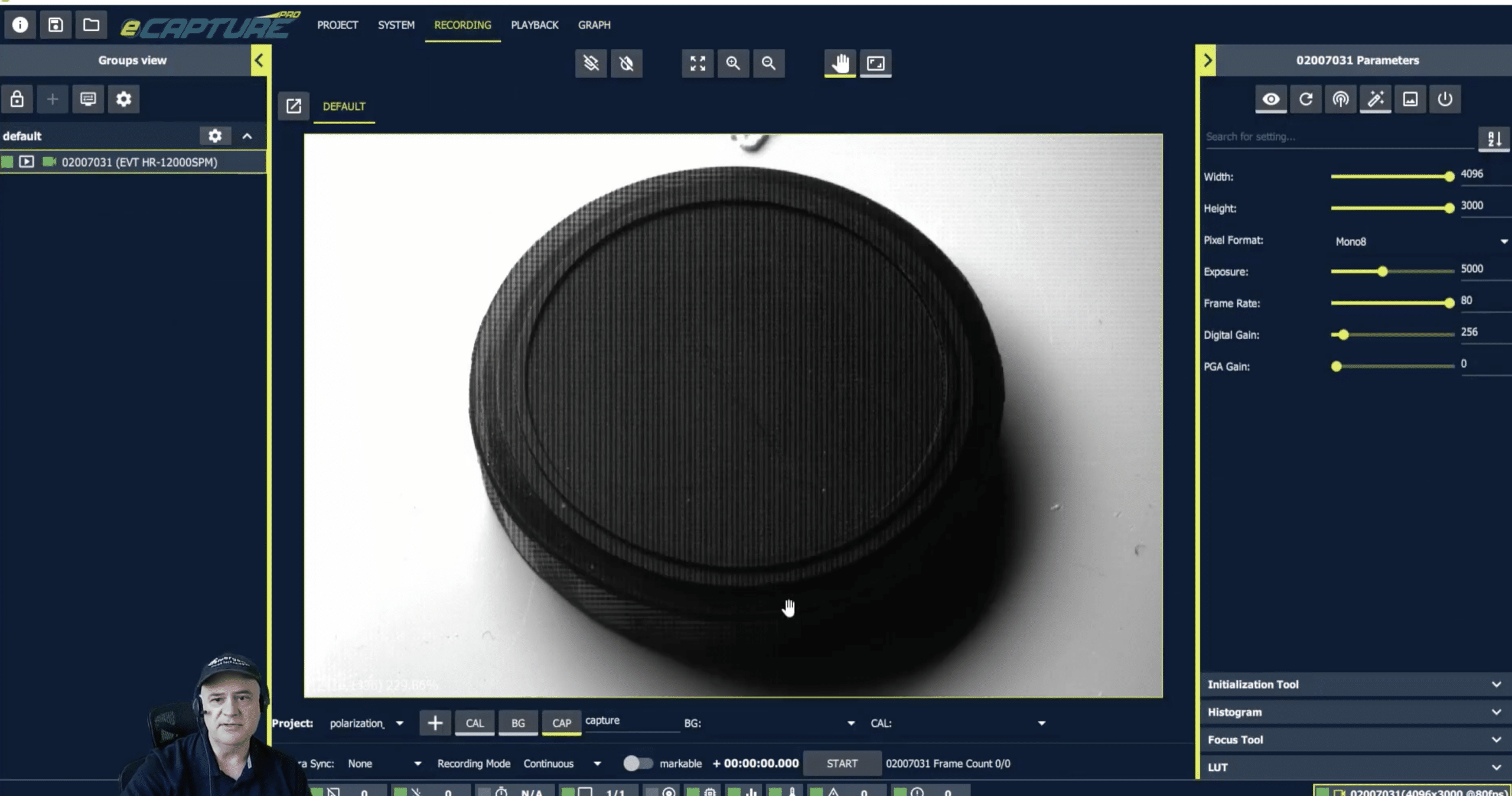

A monochrome HT-20000 camera by Emergent Vision Technologies, fitted with a 28 mm ZEISS Distagon lens, is positioned 70 cm above the surface of the tank. The HT-20000 is a 20-megapixel camera featuring the CMV20000 CMOS sensor by AMS. Its high-speed RJ45 10GBaseT interface offers a cost-efficient solution for many applications and supports cable lengths of up to 100 meters.

Infrared and RGB LED strips and a cylindrical retractable light diffuser located around the camera provide homogeneous lighting.

Videos are recorded on desktop computers with 2 TB of storage in RAID 0, running 64-bit Windows 10 and the NorPix StreamPix software.

Detection and identification of individuals

idtracker.ai uses two deep convolutional neural networks (CNNs): one to detect when animals touch or cross (the deep crossing detector) and a second to identify animals (the identification network).

The process starts with capturing image data at a rate of 25 to 50 frames per second. This allows the system to collect images belonging to the same individual and organise them into fragments for accurate tracking and identification, without overloading the system with redundant image data.
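To give a feel for why the frame rate matters, the following back-of-the-envelope sketch estimates the raw data rate at both ends of the quoted range. It is only an illustration: the frame size of 5120 x 3840 pixels, the 8-bit pixel depth and the absence of compression are assumptions that are not stated in this case study, and the function names are hypothetical.

```python
# Rough data-rate estimate for uncompressed monochrome video.
# Assumptions (not from the case study): 5120 x 3840 pixel frames,
# 8 bits per pixel, no compression, decimal units (1 TB = 1e12 bytes).

WIDTH_PX = 5120
HEIGHT_PX = 3840
BYTES_PER_PIXEL = 1
DISK_BYTES = 2e12  # the 2 TB of recording storage mentioned in the set-up


def data_rate_bytes_per_s(fps: float) -> float:
    """Bytes produced per second at the given frame rate."""
    return WIDTH_PX * HEIGHT_PX * BYTES_PER_PIXEL * fps


def minutes_until_full(fps: float) -> float:
    """Minutes of recording that fit on the disk at the given frame rate."""
    return DISK_BYTES / data_rate_bytes_per_s(fps) / 60


for fps in (25, 50):
    rate_mb = data_rate_bytes_per_s(fps) / 1e6
    print(f"{fps} fps: {rate_mb:.0f} MB/s, "
          f"disk full after about {minutes_until_full(fps):.0f} min")
```

Under these assumptions, doubling the frame rate doubles the data rate and halves the available recording time, which is one reason to avoid capturing more frames than the tracking actually needs.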

Images representing either single animals or multiple touching animals are extracted from the video. Each image is labelled as either a single individual or a crossing. Groups of images from consecutive frames of the video that show the same individual (or the same crossing) are called individual fragments and crossing fragments, respectively. idtracker.ai tracks the individuals by relying on their visual features.
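The idea of a fragment can be sketched in a few lines. The example below is a simplification and not idtracker.ai's own code: it assumes a single track whose per-frame labels are already known, and the `Fragment` type and `build_fragments` function are hypothetical names. Consecutive frames with the same label are grouped into one fragment.

```python
from itertools import groupby
from typing import List, NamedTuple


class Fragment(NamedTuple):
    kind: str         # "individual" or "crossing"
    first_frame: int  # index of the first frame in the fragment
    last_frame: int   # index of the last frame (inclusive)


def build_fragments(labels: List[str]) -> List[Fragment]:
    """Group runs of consecutive frames with the same label into fragments."""
    fragments = []
    frame = 0
    for kind, run in groupby(labels):
        length = len(list(run))
        fragments.append(Fragment(kind, frame, frame + length - 1))
        frame += length
    return fragments


# One label per frame for a single tracked blob (illustrative data).
labels = ["individual", "individual", "crossing",
          "crossing", "crossing", "individual"]
fragments = build_fragments(labels)
for fragment in fragments:
    print(fragment)
```

Here the six frames collapse into three fragments: an individual fragment, a crossing fragment, and a second individual fragment whose identity still has to be established.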

“The main challenge for us was to accurately track each of the individuals. We wanted to record a large group of juvenile zebrafish with a density of animals low enough to allow the group to express a different range of behaviours. Hence, we needed to cover a big area in proportion to the actual size of the animals. This is when having a high resolution camera came in handy. With the 20 megapixels of the HT-20000-M we were able to cover a wide area and still have enough pixels per animal for idtracker.ai to work,” says Francisco Romero-Ferrero, PhD student at Collective Behaviour Lab, Champalimaud Research.
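The trade-off between covered area and pixels per animal can be illustrated with simple arithmetic. This is a rough sketch under stated assumptions: the 70 cm tank diameter comes from the set-up description, while the 3840-pixel short side of the sensor, the tank exactly filling that side of the frame, and the 1 cm body length are assumptions chosen for illustration only.

```python
TANK_DIAMETER_CM = 70.0      # from the set-up description
SENSOR_SHORT_SIDE_PX = 3840  # assumed short side of the 20-megapixel frame
BODY_LENGTH_CM = 1.0         # hypothetical body length of a juvenile fish


def pixels_per_cm(field_of_view_cm: float, sensor_px: int) -> float:
    """Spatial resolution when the field of view spans sensor_px pixels."""
    return sensor_px / field_of_view_cm


def pixels_per_body_length(body_length_cm: float,
                           field_of_view_cm: float,
                           sensor_px: int) -> float:
    """Number of pixels along the body of one animal."""
    return body_length_cm * pixels_per_cm(field_of_view_cm, sensor_px)


resolution = pixels_per_cm(TANK_DIAMETER_CM, SENSOR_SHORT_SIDE_PX)
per_animal = pixels_per_body_length(BODY_LENGTH_CM, TANK_DIAMETER_CM,
                                    SENSOR_SHORT_SIDE_PX)
print(f"{resolution:.1f} px/cm, {per_animal:.1f} px per body length")
```

With a lower-resolution sensor covering the same tank, the pixels available per animal shrink in proportion, which is the constraint described in the quote.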

A subset of the collection of individual fragments, in which all the individuals are visible in the same part of the video, is then used to generate a data set of individual images labelled with the corresponding identities. This data set is then used to train a second CNN to classify images according to their identity (the identification network).
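Finding the part of the video in which every animal is visible on its own can be sketched as a counting problem. The example below is a minimal illustration, not the method used by idtracker.ai: it assumes individual fragments are given as hypothetical `(first_frame, last_frame)` pairs and simply returns the frames in which the number of coexisting individual fragments equals the number of animals.

```python
from typing import List, Tuple


def frames_with_all_visible(fragments: List[Tuple[int, int]],
                            n_animals: int,
                            n_frames: int) -> List[int]:
    """Return frames where exactly n_animals individual fragments coexist."""
    counts = [0] * n_frames
    for first, last in fragments:
        # Each fragment covers its first and last frame inclusively.
        for frame in range(first, last + 1):
            counts[frame] += 1
    return [frame for frame, count in enumerate(counts) if count == n_animals]


# Three animals, eight frames. Gaps correspond to frames in which
# an animal is part of a crossing (illustrative data).
individual_fragments = [(0, 5), (0, 3), (2, 7), (5, 7)]
candidate_frames = frames_with_all_visible(individual_fragments,
                                           n_animals=3, n_frames=8)
print(candidate_frames)
```

Images taken from fragments that overlap such frames can be labelled with distinct identities, because each fragment there must belong to a different animal.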

The information gained from this first data set of labelled images makes it possible either to accurately assign identities to the entire collection of individual fragments, or to enlarge the first data set by incorporating safely identified individual fragments from throughout the video. Finally, trivial identification errors are corrected by a series of post-processing routines, and the identities of the animals in the crossing fragments are inferred in a final computational step.
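One way to picture what "safely identified" could mean is to aggregate the per-image predictions within a fragment and accept the result only when they agree strongly. The sketch below is an assumption for illustration: the majority vote, the 0.9 agreement threshold and the name `assign_fragment_identity` are hypothetical and are not taken from idtracker.ai.

```python
from collections import Counter
from typing import List, Optional


def assign_fragment_identity(predictions: List[int],
                             min_agreement: float = 0.9) -> Optional[int]:
    """Return the majority identity of a fragment, or None if agreement is low.

    predictions holds one predicted identity per image in the fragment.
    """
    if not predictions:
        return None
    identity, votes = Counter(predictions).most_common(1)[0]
    if votes / len(predictions) >= min_agreement:
        return identity
    return None


# 19 of 20 images agree on identity 3, so the fragment is accepted.
confident = assign_fragment_identity([3] * 19 + [7])
# An even split between identities 1 and 2 is rejected.
uncertain = assign_fragment_identity([1, 2, 1, 2])
print(confident, uncertain)
```

Fragments that pass such a check could be added to the training data, while rejected fragments would wait for a later, better-trained pass.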

FOR FURTHER INFORMATION:

HT-20000 10GigE Camera with AMS CMV20000:

https://emergentvisiontec.com/products/ht-10gige-cameras-rdma-area-scan/ht-20000/

Full idtracker.ai Research

Website: https://idtrackerai.readthedocs.io/en/latest/

Research paper: https://www.nature.com/articles/s41592-018-0295-5