DEPLOYING MACHINE VISION FOR AUTOMOTIVE PARTS INSPECTION AND SORTING

Machine vision systems have long served the automotive industry in nearly every stage of production, improving quality control and streamlining manufacturing processes. Because automotive manufacturing is so highly customized, machine vision inspection systems must be designed to detect several different types of defects within a single subassembly.

Machine vision technology offers compelling advantages over human inspection, especially where quality must be assured across the overall automotive manufacturing inspection process. Pairing machine vision cameras with robots creates a system that can sort and inspect components despite positional uncertainty and confusing backgrounds. One example that works especially well for small components combines vision-guided picking of parts from a flexible feeder with close-range inspection in a dedicated multi-camera, multi-pose inspection rig.

Applications

AUTOMATED INSPECTION > MANUAL INSPECTION

Let’s begin with a single automotive part that is to be inspected automatically for surface defects: the automotive headlamp lens (Reference 1). In many manufacturing facilities, headlamp lenses are inspected manually by an operator who examines them under special lighting conditions, looking for defects larger than 0.5 mm.

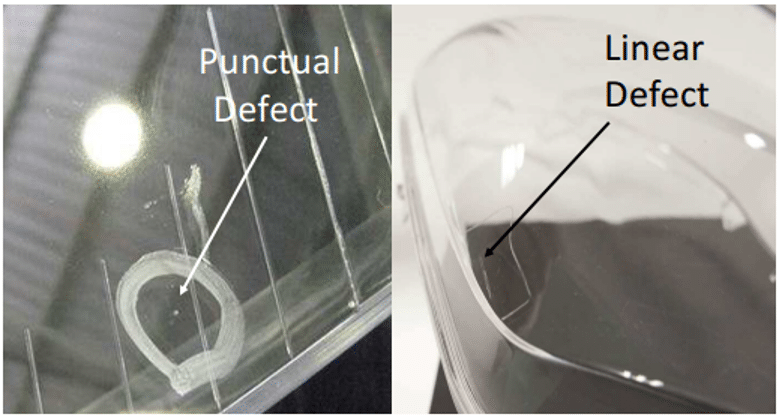

Defects whose optical properties match those of a flawless lens surface are more difficult to detect. Fig. 1 shows examples of defective headlamp lenses.

Fig. 1 This image shows some defective headlamp lenses (Image courtesy of Reference 1)

The major drawbacks of manual inspection are the time it consumes and the need to handle a wide range of lens models. To be efficient in a real industrial production environment, a manufacturer needs:

- Reliable detection of defects

- Real-time inspection within a specified time period

- A system that is adaptable to the inspection of different lens models

MACHINE VISION INSPECTION OF SMALLER AUTOMOTIVE COMPONENTS

To inspect the entire headlamp lens at a resolution that captures the smallest defect size, within the required cycle time, the machine vision system must employ multiple cameras. For instance, the inspection system described in Reference 1 uses nine machine vision cameras and can change the pose of the lenses with respect to those cameras.

Small items, especially those with reflective metallic surfaces, present a challenging quality control task for vision-guided robot systems. One key example of a small automotive component is the KArtridge™ air brake coupling, which connects tubes within the vehicle braking system. Kongsberg Automotive AS makes this multi-material coupling from composite, metal, and rubber. A very important component of these couplings is the star washer, a general-purpose metallic piece that secures the grip between a coupling and its housing.

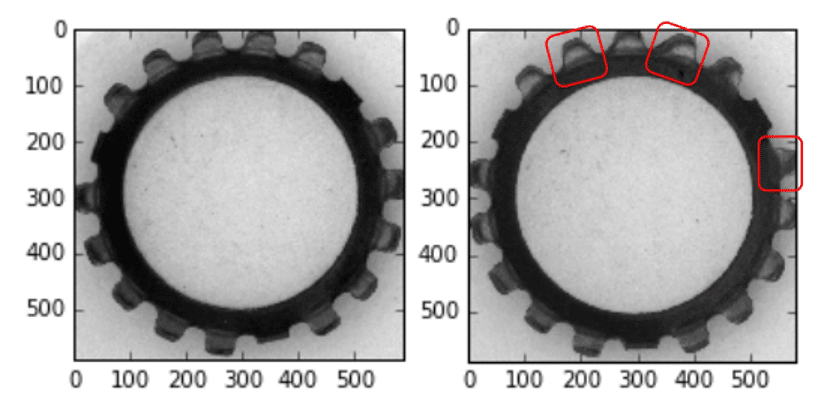

Fig. 2 shows examples of a good and a defective star washer.

Fig. 2 This image demonstrates a good star washer (on the left) and a defective star washer (on the right) with various defects in the teeth geometry. (Image courtesy of Reference 2)

The natural reflectivity of the star washers’ metallic surface, along with batch-to-batch color variability, poses challenges for machine vision systems. The small size of these components also makes inspection tricky for both the cameras and the lighting setup.

The machine vision tasks are:

- Identifying star washers on the feeder

- Classifying the orientation of each star washer

- Segmenting the star washer’s teeth for close-range inspection
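The teeth check in the last task can be illustrated with a radial-profile approach: measure the outermost foreground radius in each angular bin around the washer centre, then count the circular runs of bins where that radius exceeds a threshold (the teeth). A missing or broken tooth changes the count or shortens a run. This is a minimal sketch, not the segmentation method of Reference 2; it assumes a clean binary mask (for example from a backlit image), a known centre, outward-pointing teeth, and hypothetical values for the expected tooth count, radius threshold and minimum run length. `synthetic_washer` is a hypothetical helper that only builds a test image.

```python
import numpy as np

def radial_profile(mask, cx, cy, n_bins=90):
    """Largest foreground radius in each angular bin around (cx, cy)."""
    ys, xs = np.nonzero(mask)
    r = np.hypot(xs - cx, ys - cy)
    ang = np.mod(np.arctan2(ys - cy, xs - cx), 2 * np.pi)
    bins = np.minimum((ang / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    radii = np.zeros(n_bins)
    np.maximum.at(radii, bins, r)  # unbuffered per-bin maximum
    return radii

def tooth_runs(radii, threshold):
    """Lengths (in bins) of circular runs where the radius exceeds threshold."""
    above = radii > threshold
    if above.all():
        return [len(radii)]
    # Rotate so the sequence starts in a gap; no run can then wrap around.
    above = np.roll(above, -int(np.argmin(above)))
    runs, length = [], 0
    for a in above:
        if a:
            length += 1
        elif length:
            runs.append(length)
            length = 0
    if length:
        runs.append(length)
    return runs

def inspect_teeth(mask, cx, cy, expected_teeth, threshold, min_run):
    """Pass only if the tooth count matches and no tooth is too narrow."""
    runs = tooth_runs(radial_profile(mask, cx, cy), threshold)
    ok = len(runs) == expected_teeth and all(n >= min_run for n in runs)
    return ok, runs

def synthetic_washer(size=101, teeth=8, inner=10, body=22, tip=30, missing=()):
    """Hypothetical test mask: a ring with outer teeth, some optionally missing."""
    c = size // 2
    yy, xx = np.mgrid[0:size, 0:size]
    r = np.hypot(xx - c, yy - c)
    ang = np.mod(np.arctan2(yy - c, xx - c), 2 * np.pi)
    period = 2 * np.pi / teeth
    sector = (ang // period).astype(int)
    in_tooth = (np.mod(ang, period) < period / 2) & ~np.isin(sector, list(missing))
    return (r >= inner) & (r <= np.where(in_tooth, tip, body))

ok, runs = inspect_teeth(synthetic_washer(), 50, 50,
                         expected_teeth=8, threshold=26, min_run=3)
print(ok, len(runs))
```

The threshold is placed between the washer body radius and the tooth tip radius; for teeth that point inward, the same idea would apply to the innermost radius in each bin instead.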

AUTOMOTIVE INSPECTION CAMERAS

Emergent Vision Technologies offers an extensive range of cameras for automated inspection and sorting, including 10GigE, 25GigE, and 100GigE models with resolutions from 0.5MP to over 100MP. Frame rates reach as high as 3462fps at full 2.5MP resolution, to suit diverse imaging needs.
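The link speed matters because the raw pixel data rate is resolution × frame rate × bits per pixel. The sketch below checks that arithmetic for the 2.5MP, 3462fps figure, assuming 8-bit pixels and treating 2.5MP as exactly 2.5 million pixels. It ignores GigE Vision, UDP and IP overhead, so it gives only a lower bound on the bandwidth actually required.

```python
def raw_data_rate_gbps(megapixels, fps, bits_per_pixel=8):
    """Raw pixel data rate in Gbit/s (no GigE Vision/UDP/IP overhead)."""
    return megapixels * 1e6 * fps * bits_per_pixel / 1e9

def fits_link(megapixels, fps, link_gbps, bits_per_pixel=8):
    """True if the raw stream is no larger than the nominal link rate."""
    return raw_data_rate_gbps(megapixels, fps, bits_per_pixel) <= link_gbps

rate = raw_data_rate_gbps(2.5, 3462)
print(f"{rate:.2f} Gbit/s")  # 69.24 Gbit/s
print(fits_link(2.5, 3462, 100), fits_link(2.5, 3462, 25))  # True False
```

At 10 bits per pixel the same stream would need about 86.6 Gbit/s, so pixel format matters as much as resolution when sizing the link.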

Cameras that support Remote Direct Memory Access (RDMA) are well suited for automotive parts inspection. RDMA moves data between devices on a network without per-packet CPU involvement. Cameras leveraging GPUDirect technology can also transfer images directly to GPU memory, which can be done using Emergent eCapture Pro software. Deploying this technology delivers imaging with zero CPU utilization, zero memory bandwidth, and zero data loss.

MACHINE VISION SYSTEM CELL ARCHITECTURE

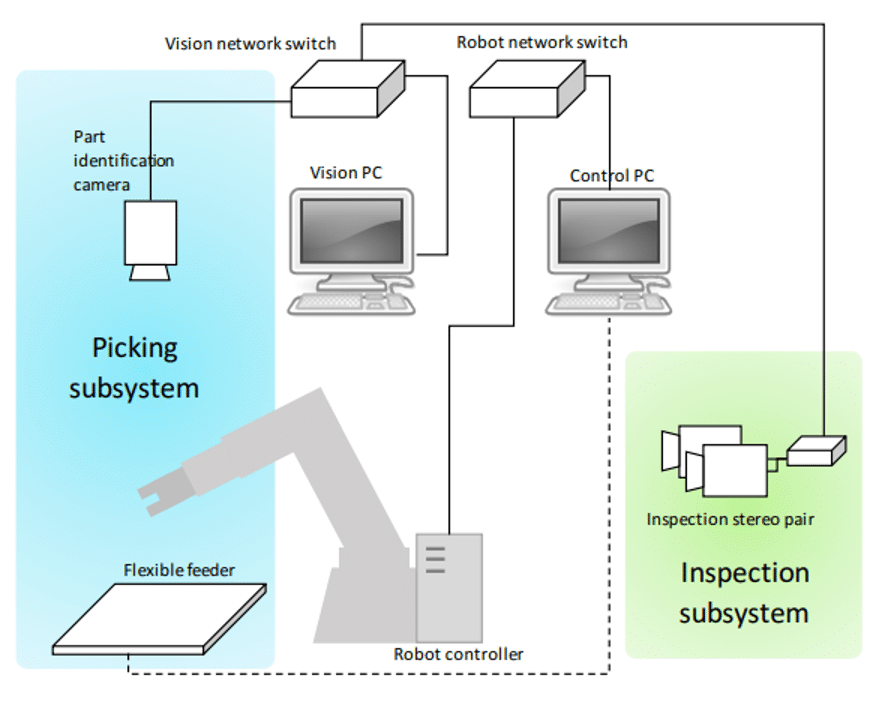

Fig. 3 This image shows the communication architecture of the demonstrator cell with an Adept Viper s850 Robot Controller (Image courtesy of Reference 2)

For part picking and manipulation, an Adept Viper s850 robot was selected for the system shown in Fig. 3. The robot controller is interfaced and programmed through a separate desktop environment, Adept Desktop version 4.2.2.8, which can be installed on a dedicated PC. Programs are written in the proprietary V+ language.

Part feeding was done using an Anyfeed SX240 flexible feeder. This feeder is designed to feed parts randomly and can be coupled with a vision system for pose estimation (a computer vision task whose goal is to detect the position and orientation of a person or, in this case, an object). The feeder can communicate with external systems through an RS-232 interface. Its picking surface is also backlit, which further simplifies image processing and part identification.
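Because the picking surface is backlit, parts appear as dark silhouettes on a bright background, so a fixed threshold followed by connected-component labelling is often enough to find them. The sketch below shows that idea with a simple pose estimate per part: the centroid plus a principal-axis angle from second-order central moments. The threshold, the minimum blob area and the choice of 4-connectivity are assumptions, and this is not the pipeline used in Reference 2. Note that a nearly round part such as a star washer has no well-defined principal axis, so the angle is mainly meaningful for elongated parts; telling a washer's face-up from face-down side would need a separate classifier.

```python
from collections import deque
import math
import numpy as np

def find_parts(gray, threshold=128, min_area=20):
    """Label dark blobs on a backlit image and return a pose for each.

    Pixels darker than `threshold` count as part pixels (4-connectivity).
    The angle is in degrees from the image x-axis, in image coordinates
    (y pointing down).
    """
    fg = gray < threshold
    h, w = fg.shape
    seen = np.zeros_like(fg, dtype=bool)
    parts = []
    for y0, x0 in zip(*np.nonzero(fg)):
        if seen[y0, x0]:
            continue
        # Breadth-first flood fill collects one connected blob.
        queue, pix = deque([(y0, x0)]), []
        seen[y0, x0] = True
        while queue:
            y, x = queue.popleft()
            pix.append((y, x))
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and fg[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        if len(pix) < min_area:
            continue  # ignore dust specks and noise
        ys, xs = np.array(pix, dtype=float).T
        cx, cy = xs.mean(), ys.mean()
        # Central second-order moments give the blob's principal axis.
        mu20 = ((xs - cx) ** 2).mean()
        mu02 = ((ys - cy) ** 2).mean()
        mu11 = ((xs - cx) * (ys - cy)).mean()
        angle = 0.5 * math.degrees(math.atan2(2 * mu11, mu20 - mu02))
        parts.append({"centroid": (cx, cy), "area": len(pix), "angle_deg": angle})
    return parts

# Synthetic backlit frame: bright background, two dark parts, one dust speck.
img = np.full((60, 80), 255, dtype=np.uint8)
img[10:20, 10:40] = 0   # 10 x 30 bar lying horizontally
img[35:55, 60:65] = 0   # 20 x 5 bar standing vertically
img[2, 70] = 0          # single-pixel speck, filtered out by min_area
parts = find_parts(img)
for p in parts:
    print(p)
```

Real feeder images may contain touching parts, which a plain threshold would merge into one blob; those cases are usually rejected by an area check and shaken apart by the feeder rather than picked.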

GPUDIRECT: ZERO-DATA-LOSS IMAGING

Instead of relying on proprietary or point-to-point interfaces and image acquisition boards, Emergent uses the GigE Vision standard and ubiquitous Ethernet infrastructure for reliable, robust data acquisition and transfer with best-in-class performance. Emergent deploys an optimized GigE Vision implementation with support for direct transfer technologies such as NVIDIA’s GPUDirect, which transfers images directly to GPU memory. This reduces the impact of large data transfers on the system CPU and memory and uses the more powerful GPU for data processing, while maintaining compatibility with the GigE Vision standard and interoperability with compliant software and peripherals.

PATTERN MATCHING

The video below shows how easily you can create and prototype a high-quality pattern matching algorithm while writing only your own custom GPU CUDA code.
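Pattern matching of this kind is commonly built on normalized cross-correlation (NCC), which scores each template position by the correlation between the template and the image patch beneath it, so the score is insensitive to uniform changes in brightness and contrast. The sketch below is a brute-force CPU version in NumPy, written for clarity rather than speed; it is not Emergent's implementation. A GPU version would typically parallelize the same per-position computation, for example with one CUDA thread per candidate position.

```python
import numpy as np

def ncc_match(image, template):
    """Return (best_score, (x, y)) for the top-left corner of the best match.

    Brute-force normalized cross-correlation, O(H*W*h*w): fine for small images.
    """
    image = image.astype(float)
    t = template.astype(float)
    th, tw = t.shape
    t = t - t.mean()
    t_norm = np.sqrt((t ** 2).sum())
    best_score, best_pos = -1.0, (0, 0)
    for y in range(image.shape[0] - th + 1):
        for x in range(image.shape[1] - tw + 1):
            patch = image[y:y + th, x:x + tw]
            p = patch - patch.mean()
            denom = np.sqrt((p ** 2).sum()) * t_norm
            if denom == 0:
                continue  # flat patch or flat template: correlation undefined
            score = float((p * t).sum() / denom)
            if score > best_score:
                best_score, best_pos = score, (x, y)
    return best_score, best_pos

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(40, 60))
# Template cut from the image, then contrast-stretched and brightened.
tmpl = img[12:20, 30:40] * 2 + 10
score, pos = ncc_match(img, tmpl)
print(round(score, 6), pos)
```

Because the mean is subtracted and the result normalized, the brightened, contrast-stretched template still scores a correlation of 1 at its original location.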

INFERENCE

The video below shows how easily you can add and test your own trained inference model to detect and classify arbitrary objects. Simply train your model with PyTorch or TensorFlow and add it to your own eCapture Pro plug-in. Then instantiate the plug-in, connect to the desired camera, and click Run. It does not get easier than this.
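Whatever framework trains the model, a sorting cell still has to turn the network's raw output into an action. A minimal sketch, assuming a hypothetical classifier that outputs one logit per class; the class names (`good`, `flipped`, `defective`), the action mapping and the 0.9 confidence threshold are illustrative assumptions, not part of eCapture Pro. Low-confidence predictions are routed to manual review rather than trusted.

```python
import math

CLASSES = ("good", "flipped", "defective")   # hypothetical class labels
ACTIONS = {"good": "accept", "flipped": "re-feed", "defective": "reject"}

def softmax(logits):
    """Convert raw logits to probabilities (max subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def decide(logits, min_confidence=0.9):
    """Map model output to a sorting action, or to manual review if unsure."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return "manual-review", probs[best]
    return ACTIONS[CLASSES[best]], probs[best]

print(decide([4.0, 0.5, 0.1]))   # confident "good" prediction
print(decide([1.0, 0.8, 0.9]))   # ambiguous output
```

The threshold trades throughput against escapes: raising it sends more parts to review, lowering it lets more uncertain predictions drive the robot directly.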

With well-trained models, inference applications can be developed and deployed with many Emergent cameras on a single PC with a couple of GPUs, using Emergent’s GPUDirect functionality. Nobody does performance applications like Emergent.

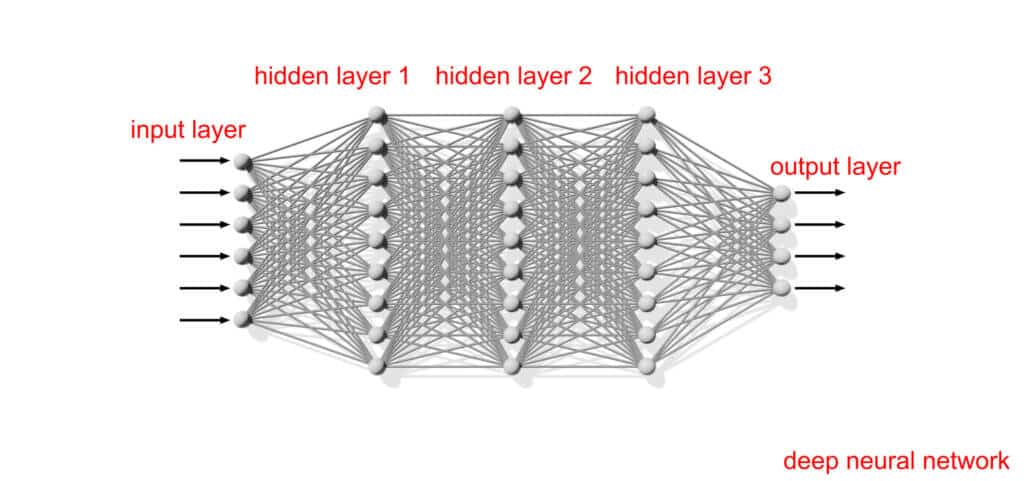

Fig 4: Loosely modeled on the human brain, neural networks are a subset of machine learning and sit at the heart of deep learning algorithms, which allow a computer to learn specific tasks from training examples.

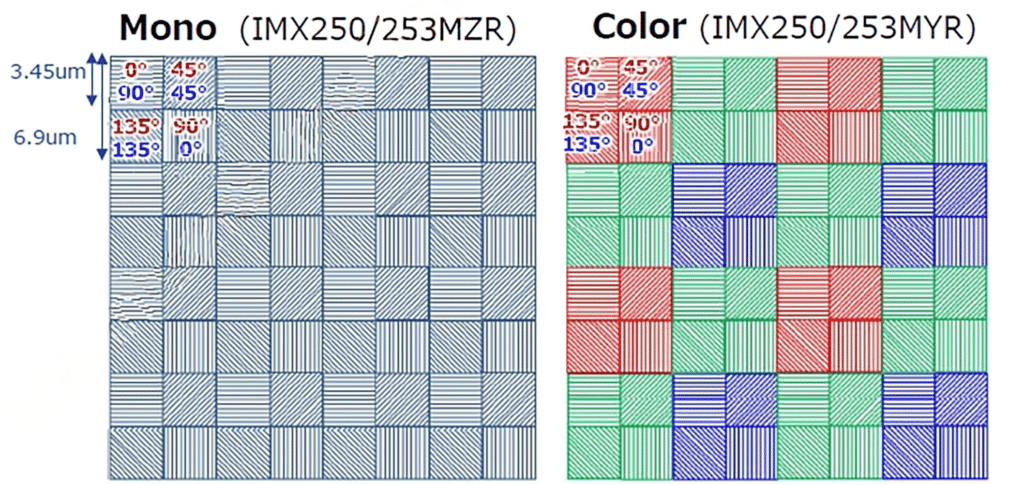

POLARIZATION

Fig 5: In Sony PolarSens CMOS polarized sensors, tiny wire-grid polarizers over every lens have 0°, 45°, 90°, and 135° polarization angles in four-pixel groups.
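Each four-pixel group yields four intensity samples, I0, I45, I90 and I135, from which the linear Stokes parameters follow: S0 = (I0 + I45 + I90 + I135)/2, S1 = I0 − I90 and S2 = I45 − I135. From these come the degree of linear polarization, DoLP = √(S1² + S2²)/S0, and the angle of linear polarization, AoLP = ½·atan2(S2, S1). Light reflected from glossy surfaces is often partially polarized while diffuse light is largely unpolarized, so DoLP and AoLP images can help separate glare from the surface underneath. The sketch assumes the four angle channels have already been separated from the raw mosaic; the pixel layout within each group varies by sensor, so check the sensor datasheet before demosaicking.

```python
import numpy as np

def linear_polarization(i0, i45, i90, i135):
    """Per-pixel S0, DoLP and AoLP (radians) from the four polarizer channels."""
    i0, i45, i90, i135 = (np.asarray(a, dtype=float) for a in (i0, i45, i90, i135))
    s0 = 0.5 * (i0 + i45 + i90 + i135)   # total intensity
    s1 = i0 - i90                         # 0° versus 90° preference
    s2 = i45 - i135                       # 45° versus 135° preference
    with np.errstate(divide="ignore", invalid="ignore"):
        dolp = np.where(s0 > 0, np.hypot(s1, s2) / s0, 0.0)  # 0 where dark
    aolp = 0.5 * np.arctan2(s2, s1)
    return s0, dolp, aolp

# Fully polarized light at 30°, intensity 100, sampled via Malus's law.
phi, intensity = np.deg2rad(30), 100.0
samples = [intensity * np.cos(np.deg2rad(a) - phi) ** 2 for a in (0, 45, 90, 135)]
s0, dolp, aolp = linear_polarization(*samples)
print(float(s0), float(dolp), float(np.rad2deg(aolp)))
```

For unpolarized light all four channels read the same, so S1 and S2 vanish and DoLP is zero.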

EMERGENT GIGE VISION CAMERAS FOR PARTS INSPECTION APPLICATIONS

| Model | Chroma | Resolution | Frame Rate | Interface | Sensor Name | Pixel Size |
|---|---|---|---|---|---|---|
| HR-5000-S-M | Mono | 5MP | 163fps | 10GigE SFP+ | Sony IMX250LLR | 3.45×3.45µm |
| HR-5000-S-C | Color | 5MP | 163fps | 10GigE SFP+ | Sony IMX250LQR | 3.45×3.45µm |
| HR-25000-SB-M | Mono | 24.47MP | 51fps | 10GigE SFP+ | Sony IMX530 | 2.74×2.74µm |
| HR-25000-SB-C | Color | 24.47MP | 51fps | 10GigE SFP+ | Sony IMX530 | 2.74×2.74µm |
| HB-65000-G-M | Mono | 65MP | 35fps | 25GigE SFP28 | Gpixel GMAX3265 | 3.2×3.2µm |
| HB-65000-G-C | Color | 65MP | 35fps | 25GigE SFP28 | Gpixel GMAX3265 | 3.2×3.2µm |
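Choosing among these cameras usually starts with a few hard requirements. As a small illustration, the rows of the table can be encoded and filtered by minimum resolution, minimum frame rate and chroma. The values are transcribed from the table; the requirement used in the usage line is hypothetical, and the sketch is no substitute for Emergent's system designer tool.

```python
from typing import NamedTuple, Optional

class Camera(NamedTuple):
    model: str
    chroma: str
    megapixels: float
    fps: int
    interface: str

# Values transcribed from the table above.
CAMERAS = [
    Camera("HR-5000-S-M", "Mono", 5.0, 163, "10GigE SFP+"),
    Camera("HR-5000-S-C", "Color", 5.0, 163, "10GigE SFP+"),
    Camera("HR-25000-SB-M", "Mono", 24.47, 51, "10GigE SFP+"),
    Camera("HR-25000-SB-C", "Color", 24.47, 51, "10GigE SFP+"),
    Camera("HB-65000-G-M", "Mono", 65.0, 35, "25GigE SFP28"),
    Camera("HB-65000-G-C", "Color", 65.0, 35, "25GigE SFP28"),
]

def candidates(min_mp: float, min_fps: int, chroma: Optional[str] = None):
    """Cameras meeting the resolution and frame-rate floor, highest fps first."""
    hits = [c for c in CAMERAS
            if c.megapixels >= min_mp and c.fps >= min_fps
            and (chroma is None or c.chroma == chroma)]
    return sorted(hits, key=lambda c: c.fps, reverse=True)

# Hypothetical requirement: at least 20 MP at 40 fps or more, monochrome.
print([c.model for c in candidates(20, 40, "Mono")])  # ['HR-25000-SB-M']
```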

For additional camera options, check out our interactive system designer tool.