Case Study: 3D Scanning System Brings Digital Characters to Life

Advancements in visual effects (VFX) have led to a digital transformation in the entertainment industry and changed the way we consume media. Bringing digital characters, objects, and environments to life in movies, television shows, and video games today starts with the deployment of a high-speed 3D digital capture system.

Several challenges may emerge when using 3D head-scanning systems for the VFX pipeline, however. Techniques such as laser scanning or structured light will require a subject to hold still — which can slow down an entire film production — and may require the removal of specular components from a captured image after the fact. In addition, complex systems require professional electrical and mechanical engineers to set up. To address these issues, turnkey photogrammetry scanning company Pixel Light Effects leverages high-speed, high-resolution GigE Vision cameras in its 3D digital capture system to enable the creation of true-to-life, accurate volumetric scans for the VFX pipeline.

Avoid the Uncanny Valley

Thirty-two years ago, James Cameron’s Terminator 2 emerged as a watershed moment in VFX, reportedly representing the first time a lead character in a feature film was created in part using computer graphics. While the effects still hold up, especially for the time, the technology has far surpassed that of the 1991 sequel. (See Cameron’s 2022 Avatar: The Way of Water, for instance.) Still, for all the well-executed examples of high-quality VFX that exist, many on the other end of the spectrum do as well. Think of the digital removal of Henry Cavill’s mustache in the 2017 Justice League or the unfortunate uncanny valley effect that 2004’s Polar Express imparted on many viewers.

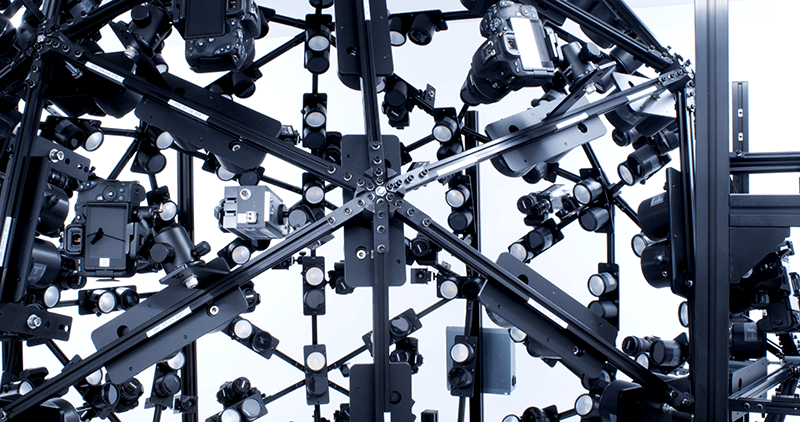

Bad VFX can ruin a movie, while great effects deliver the realism needed to keep viewers engaged in the movie-watching experience. For Pixel Light Effects (whose recent projects include Prey, Deadpool 2, War for the Planet of the Apes, Altered Carbon, The Chilling Adventures of Sabrina, and Once Upon a Time), this means deploying what it calls 4D scanning (or “3D with time”) volumetric capture techniques. With 4D scanning, which is used for 3D character animation company Reallusion, each frame acquired is a 3D model with a texture map. Once the 12 x 12 foot head-scanning rig is installed and set up (Figure 1), the cameras are calibrated and shooting parameters are stored in the company’s proprietary software and synchronized in each startup sequence. Built-in settings also allow changes to shooting parameters such as camera settings, frame rate, LED brightness, and lighting sequence.

Figure 1: A replica dinosaur skull is placed inside of the 3D digital capture system for scanning.

Shutters and LED strobes are hardware-synchronized to the camera settings. The system uses 142 linearly polarized LED strobes at 60 fps to ensure light intensity without drawing an excessive amount of power or being too bright for the talent. These lights create a diffused lighting environment for texture acquisition and also bring out wrinkles on a face. Frames from 32 machine vision cameras are streamed into a custom-configured data server with either a high-speed solid-state drive (SSD) or large amounts of RAM, with the data then going to a graphics processing unit (GPU) for encoding.

Ditching Digital Doubles

Each frame is constructed from 32 images, allowing the system to track the mesh sequence in Wrap4D for consistent topology. The final output of the system is typically used in two different ways for animation, according to Jingyi Zhang, CEO of Pixel Light Effects.

“First, the data can be used as rigging references to study how faces deform between expressions, allowing CGI artists to extract ‘in-betweens’ from a continuous mesh to add extra blend shapes in their face rig,” he says. “In addition, the system delivers the ability to do performance capture in 4D, where the captured sequence can be overlaid with rigged animation to verify the performance.”

To avoid the mistakes of past movies that took viewers out of the experience, Pixel Light Effects captures human actor performance and creates digital doubles using 4D technology, then extends the performance of the actor into 3D digital space.

Figure 2: Pixel Light Effects’ 3D scanning system leverages high-speed, high-resolution cameras with zero data loss to deliver higher quality digital doubles.

“A major difficulty in facial animation is capturing the right feeling or mood, as subtleties can get lost in the motion capture and animation process,” Zhang says. “We help direct the talents into the right moods and replicate that acting in a 3D environment.

“We’ve heard many people say that digital doubles are getting so real that we don’t need actors anymore, and we strongly disagree,” he continues. “We rely on the talent and performance of the actors and bring that into CGI so the audience can be immersed in the story and have a better experience.”

A High-Speed, High-Resolution Upgrade

In a previous design, the system used 32x 5MP 10GigE cameras, but the 3D/4D reconstruction result was less than ideal, according to Zhang.

“With the prior iteration, the system struggled to extract geometry details and the low texture quality impacted tracking results,” he says. “Other high-speed, high-resolution cameras on the market at the time were not economical for our application. So when we found Emergent Vision Technologies’ camera, it was a game changer, allowing us to deliver a superior and more accessible solution for our client’s content-creation process.”

Pixel Light Effects first chose the HR-12000-S-C camera, a 10GigE color camera based on the 12MP Sony IMX253 that can reach frame rates of up to 80 fps. Camera benefits include low noise, multi-camera synchronization, and fiber cable lengths from 1 m to 10 km without the need for fiber converters or repeaters. These cameras were chosen due to performance and customer support, says Zhang.

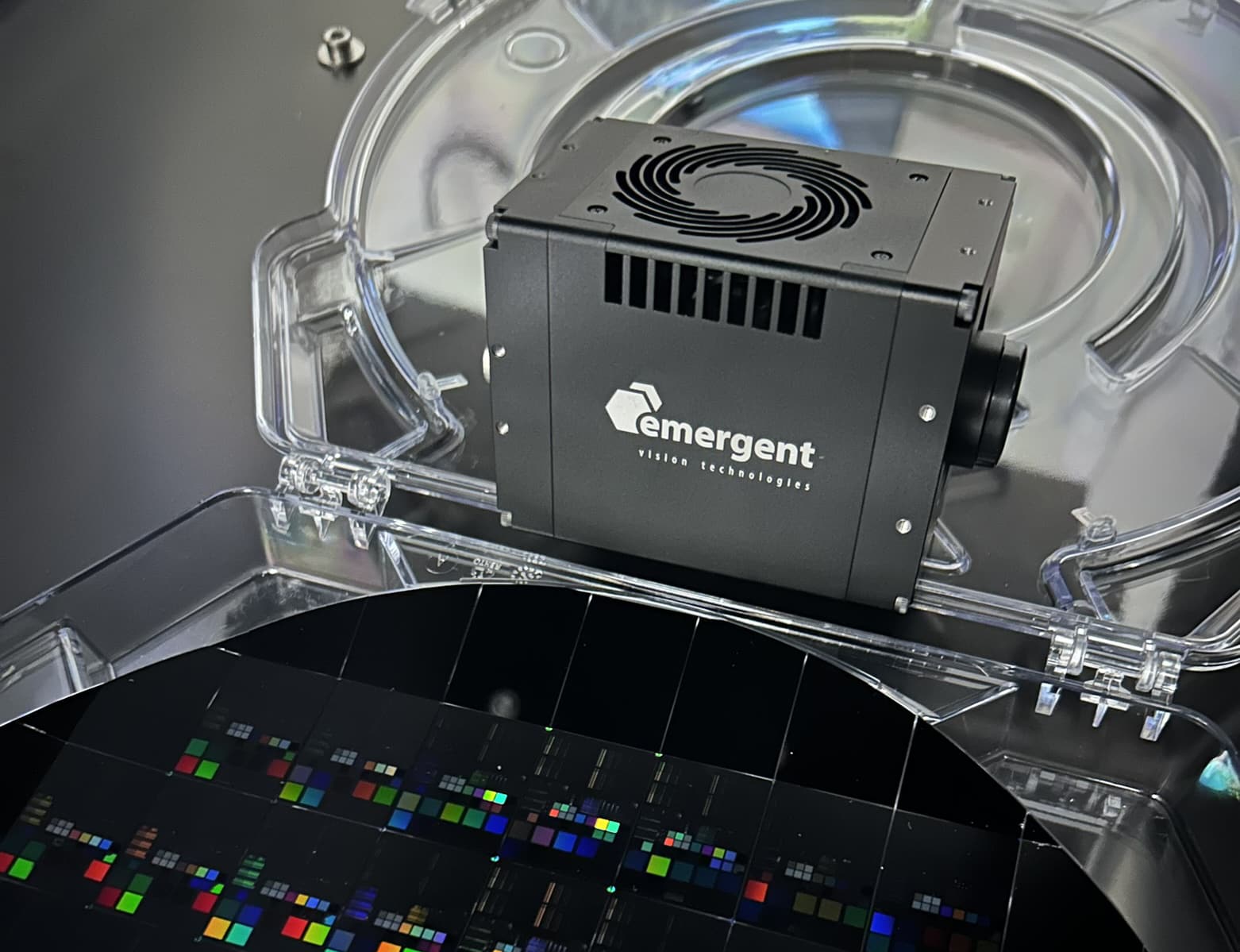

Figure 3: After first deploying 10GigE cameras from Emergent, Pixel Light Effects will install 32x 25GigE cameras with 24.47MP resolution that can reach 98 fps.

“Resolution and frame rate are equally important in our systems, but Emergent didn’t just have the cameras we needed, but also the established software development kit and network interface cards to facilitate fast and reliable data transfer,” he says, adding that Emergent offered a knowledgeable technical support team that answered all questions very promptly.

In the new version of the system, Pixel Light Effects will integrate 25GigE HB-25000-SB-C cameras, which are based on the 24.47MP Sony IMX530 and can reach frame rates of 98 fps through the high-speed 25GigE QSFP28 interface.

“Previous designs leveraged the 10GigE interface, but these new 25GigE cameras let us capture data reliably at similar frame rates but at much higher resolution, making our system much more competitive on the open market,” Zhang says.
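To put the interface upgrade in perspective, the short Python sketch below estimates the raw data rate each camera would generate at its maximum frame rate, along with the combined load of a 32-camera rig. The resolutions, frame rates, and link speeds come from the figures quoted above. The 8-bit raw pixel format is an assumption made for illustration, protocol overhead is ignored, and the class and function names are hypothetical rather than part of any Emergent or Pixel Light Effects software.

```python
from dataclasses import dataclass

# ASSUMPTION: 8 bits per pixel (raw Bayer output). The article does not
# state the pixel format; deeper formats would scale the results up.
BITS_PER_PIXEL = 8

# Camera count taken from the article (32 cameras in the rig).
NUM_CAMERAS = 32


@dataclass(frozen=True)
class CameraSpec:
    """Headline camera figures as quoted in the article."""
    name: str
    megapixels: float   # sensor resolution in megapixels
    max_fps: float      # maximum frame rate
    link_gbps: float    # nominal interface speed in Gbit/s


def per_camera_gbps(cam: CameraSpec, bits_per_pixel: int = BITS_PER_PIXEL) -> float:
    """Raw image payload of one camera at maximum frame rate, in Gbit/s."""
    return cam.megapixels * 1e6 * cam.max_fps * bits_per_pixel / 1e9


def rig_gigabytes_per_second(cam: CameraSpec,
                             num_cameras: int = NUM_CAMERAS,
                             bits_per_pixel: int = BITS_PER_PIXEL) -> float:
    """Combined payload of the whole rig at maximum frame rate, in GB/s."""
    return per_camera_gbps(cam, bits_per_pixel) * num_cameras / 8


CAMERAS = [
    CameraSpec("HR-12000-S-C", megapixels=12.0, max_fps=80.0, link_gbps=10.0),
    CameraSpec("HB-25000-SB-C", megapixels=24.47, max_fps=98.0, link_gbps=25.0),
]

if __name__ == "__main__":
    for cam in CAMERAS:
        rate = per_camera_gbps(cam)
        print(f"{cam.name}: {rate:.2f} Gbit/s per camera "
              f"({rate / cam.link_gbps:.0%} of a {cam.link_gbps:.0f} Gbit/s link), "
              f"{rig_gigabytes_per_second(cam):.1f} GB/s for {NUM_CAMERAS} cameras")
```

Under these assumptions, the 12MP camera at 80 fps produces roughly 7.7 Gbit/s, which fits within a 10GigE link, while the 24.47MP camera at 98 fps produces roughly 19.2 Gbit/s, which exceeds 10GigE but fits within 25GigE. These are upper bounds at the maximum frame rate; a rig running at a lower frame rate would generate proportionally less data.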

FOR FURTHER INFORMATION:

Emergent Vision Technologies’ High-Speed Cameras:

https://emergentvisiontec.com/area-scan-cameras/