Tech Portal

Machine Vision Cameras: How to Choose the Right Camera for Your Machine Vision Application

Thanks to improvements in performance and falling prices, machine vision systems have become more useful and accessible for a wide range of applications. However, many factors must be considered when selecting an industrial camera system.

Understanding industrial camera and optics specifications is critical when selecting the right camera for a machine vision application. After all, the sensor and lens work together to let a camera capture a properly illuminated image of an object. During image acquisition, the camera sensor converts light (photons) arriving through the lens into electrical charge (electrons). The resulting signal, typically generated by either a charge-coupled device (CCD) or complementary metal oxide semiconductor (CMOS) image sensor, forms an image made up of pixels. Processors then analyze the image, which is composed of dark pixels where light levels were low and bright pixels where light was more intense.

While every machine vision inspection project is different, most camera inspection system developers start by defining comprehensive requirements, listing each machine vision task, and collecting a range of sample parts to be imaged. After deciding what must be accomplished — such as OCR (optical character recognition), barcode reading, metrology, obtaining high-quality color images, looking at high speed events, or some other task — the design phase typically starts by selecting an industrial machine vision camera.

Choosing cameras for camera inspection systems is not trivial. The machine vision camera landscape has undergone rapid expansion in recent years, with numerous manufacturers offering a dizzying array of camera models. From line scan, area array, high-speed, high-resolution, and analog to ultraviolet (UV), monochrome, color, NIR, SWIR, IR, multispectral, and hyperspectral, each industrial camera type boasts unique functionalities.

Because choosing the right machine vision camera (or computer vision camera) from the thousands available on the market can be daunting, this article aims to help readers navigate this complex landscape and select the optimal camera for their specific needs. It covers the key selection factors, including radiation type, resolution, pixel size, quantum efficiency, frame rate, exposure time, camera size, and image transfer speed. It also outlines what to look for in the technologies behind high-speed machine vision applications, such as camera interfaces and their protocols, processing technologies, and software. Finally, it explains the differences between RDMA-, TCP-, and UDP-based GigE implementations in the context of the high-speed, RDMA-ready machine vision cameras available today.

Application Constraints

Selecting the ideal industrial camera requires careful consideration of multiple factors, including its physical dimensions, weight, and power consumption. These constraints can significantly narrow the options, especially in specialized applications. Consider, for example, an autonomous mobile robot (AMR) tasked with moving items through a warehouse. This AMR needs a camera that is small, lightweight, and low in power consumption to maximize operating time. In this scenario, size and weight limitations become critical decision points.

Environmental factors also play a crucial role in camera comparisons. Will the camera be used outdoors, requiring an enclosure for protection? Or will it operate in a controlled indoor environment? Understanding the environmental conditions will guide selection of camera features suitable for those specific conditions. Subject speed is another factor that can significantly impact camera selection. For capturing rapid movement and freezing the action, high-speed cameras are essential, as they allow for detailed analysis of the subject’s motion.
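To put a number on “freezing the action,” a common rule of thumb is to keep the distance the subject travels during one exposure below about one pixel in object space. The sketch below assumes a constant subject speed and a field of view that maps evenly onto the sensor. The function names and example values are hypothetical.

```python
def object_pixel_size_mm(fov_mm: float, pixels_across: int) -> float:
    """Size of one pixel projected onto the object plane (mm per pixel)."""
    return fov_mm / pixels_across


def motion_blur_px(speed_mm_s: float, exposure_s: float,
                   fov_mm: float, pixels_across: int) -> float:
    """Blur length in pixels: distance moved during the exposure / object pixel size."""
    return speed_mm_s * exposure_s / object_pixel_size_mm(fov_mm, pixels_across)


def max_exposure_s(speed_mm_s: float, fov_mm: float, pixels_across: int,
                   max_blur_px: float = 1.0) -> float:
    """Longest exposure that keeps motion blur within max_blur_px pixels."""
    return max_blur_px * object_pixel_size_mm(fov_mm, pixels_across) / speed_mm_s


# Hypothetical example: parts moving at 500 mm/s, 200 mm field of view, 2,000 pixels across.
print(f"{max_exposure_s(500, 200, 2000) * 1e6:.0f} us")   # prints "200 us"
print(f"{motion_blur_px(500, 1e-3, 200, 2000):.1f} px")   # prints "5.0 px"
```

With a 1 ms exposure, the same part would smear across about five pixels. A shorter exposure, and usually more light or a strobe, would be needed to freeze it.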

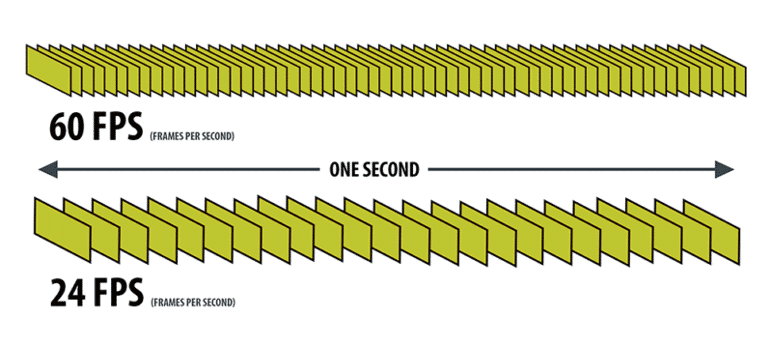

If light is limited, consider cameras with high sensitivity to light. These cameras can capture clear images even with minimal available light, making them ideal for confined spaces or low-light environments. Additional factors to appraise in camera comparisons include frame rate, resolution, and dynamic range. Frame rate specifies how many frames the camera captures per second. Higher frame rates are necessary for capturing fast-moving subjects or for smooth slow-motion playback. Resolution determines image detail and clarity. Higher resolutions capture more detail but require more image storage space. Dynamic range refers to the camera’s ability to capture detail in both bright and dark areas of the scene. Cameras with higher dynamic range produce images with more realistic lighting and fewer blown-out highlights or lost shadows.
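Resolution, bit depth, and frame rate multiply together into the amount of data the camera must move and store. This back-of-the-envelope sketch ignores compression, protocol overhead, and any on-camera region of interest. The camera parameters are hypothetical.

```python
def raw_data_rate_bytes_per_s(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed image data produced per second (no protocol overhead)."""
    return width * height * bits_per_pixel / 8 * fps


def storage_per_hour_tb(rate_bytes_per_s: float) -> float:
    """Decimal terabytes needed to record one hour at the given rate."""
    return rate_bytes_per_s * 3600 / 1e12


# Hypothetical 12 MP monochrome camera: 4000 x 3000 pixels, 8 bits per pixel, 50 fps.
rate = raw_data_rate_bytes_per_s(4000, 3000, 8, 50)
print(f"{rate / 1e6:.0f} MB/s = {rate * 8 / 1e9:.1f} Gbit/s")  # 600 MB/s = 4.8 Gbit/s
print(f"{storage_per_hour_tb(rate):.2f} TB per hour")          # 2.16 TB per hour
```

Raising the bit depth from 8 to 12 bits, or doubling the frame rate, scales these figures proportionally.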

A Look Inside a Machine Vision Camera

Cracking open a basic machine vision camera reveals its inner workings. Most machine vision cameras can be broken down into two main parts: a set of circuit boards and the image sensor itself. The circuit boards, commonly called back-end circuitry, implement the camera’s control logic. They may include A/D (analog-to-digital) converters, amplifiers, clocks for exposure and frame rate, readout circuits, FPGAs (field-programmable gate arrays), memory, and other devices. Think of this circuitry as the camera’s brain, handling tasks such as converting signals, controlling timing, and storing incoming images.

The machine vision camera’s front end is essentially the image sensor. The image sensor is the eye of the camera, capturing light and generating the image. Image sensors come in two main flavors: visible light sensors (silicon CCD or CMOS) and infrared sensors (made from various other materials). While much of this discussion applies to selecting both visible and infrared cameras, it does not cover the many types of infrared sensors, such as cooled and uncooled detectors made from a wide variety of materials. Instead, it focuses on visible light sensors, which are like silicon sponges soaking up light and turning it into digital pictures.

Electromagnetic Radiation

The human eye, like a tiny window to the world, only lets in a sliver of the grand electromagnetic spectrum. Luckily, cameras have become powerful extensions of sight, letting us see far beyond the limits of human vision. Imagine a vast cosmic light show, where each band of the spectrum plays a unique role. Each band covers a specific range of wavelengths, and these wavelengths dictate what can be seen.

Think of wavelengths like a ruler for the universe. Shorter wavelengths, like those in ultraviolet or X-rays, can resolve incredibly tiny details, like the intricate patterns on a butterfly’s wing or the delicate structure of a virus. On the other hand, longer wavelengths such as infrared struggle to distinguish fine details. They’re great for detecting heat signatures, revealing the presence of humans, or even a hot cup of coffee in the dark. But for zooming in on microscopic structures, they fall short.

Specific applications necessitate specialized cameras. For instance, certain machine vision and imaging functions demand cameras with sensitivity beyond the visible light spectrum. Other tasks require cameras equipped to examine highly reflective materials like glass. For systems integrators seeking area-scan cameras with sensitivity beyond visible light that also operate at high speeds and high data rates, Emergent Vision Technologies offers 10GigE and 25GigE multispectral cameras with ultraviolet sensitivity, as well as 10GigE cameras with Sony CMOS polarized image sensors.

Both the 25GigE Bolt HB-8000-SB-U and the 10GigE HR-8000-SB-U use Sony’s 8.1 MP UV Pregius S IMX487 CMOS image sensor, which offers heightened sensitivity across the UV spectrum from 200 nm to 400 nm. The IMX487, a 2/3″ CMOS sensor with a global shutter, efficiently captures UV light. Like other Sony Pregius S sensors, it pairs a back-illuminated pixel structure with a stacked CMOS design, delivering fast, distortion-free imaging.

The 25GigE HB-8000-SB-U achieves a frame rate of 201 fps, whereas the 10GigE HR-8000-SB-U achieves 145 fps. These GigE Vision cameras, compliant with GenICam standards, are designed for multispectral imaging applications, including semiconductor inspection, waste plastic sorting, high-voltage cable inspection, printing inspection, high-resolution microscopy, and luminescence spectroscopy.

So, how do cameras capture images of these visible and invisible worlds? The journey begins with electromagnetic radiation, commonly known as light. When a photon is absorbed in a pixel, it can free an electron. The goal in imaging is to convert photons into electrons and hold them in the pixel, which acts much like a capacitor. As more light strikes the pixel, additional free electrons are produced and stored as charge until they are retrieved during the readout process.

Full Well Capacity: How Much Light Can a Pixel Handle?

Ever seen a bright object bleed its light into surrounding areas in an image? That’s called blooming, and it happens when a pixel gets overloaded with light – like an overflowing bucket. The maximum amount of light a pixel can handle before blooming is called its full well capacity (FWC). It’s like the size of that bucket, measured in tiny charged particles called electrons. When the pixel is totally saturated and can hold no more charge, it is said to have reached its FWC.

Data sheets typically define the FWC of a sensor in terms of how many electrons it takes to reach that state. For example, a sensor’s FWC may be given as 20,000 electrons. If camera company datasheets do not include the FWC for a given camera, the camera company might list which sensor it uses. If not, ask the camera company and get the image sensor datasheet from the foundry, which should specify the FWC. Knowing the FWC is crucial for different applications:

Low-light Imaging: In dim conditions, every photon counts. A sensor with a high FWC can integrate over longer exposures without saturating the brighter parts of the scene, helping faint details rise above noise and grain for clearer images even in darkness.

High Dynamic Range Imaging: High FWC sensors enable cameras to capture a wider range of light intensities without clipping bright highlights (blown-out whites). Think of cameras used in autonomous vehicles that must navigate a dark tunnel and then emerge safely into a cityscape with bright sun and deep shadows. A high FWC sensor can hold onto the details at both extremes without clipping, creating richer, more realistic images.

Scientific imaging: Accurate FWC values are vital for precise measurements in research applications. Capturing the Milky Way’s delicate glow or distant galaxies requires a sensor that can handle the vast range of light levels in the night sky. For applications like astronomy or medical imaging, where capturing faint signals is crucial, a high FWC sensor is essential.

Sensor circuits decide when to allow light to start creating electrons, a period called integration, and when to stop. At readout, the circuitry converts the charge accumulated in each well into a voltage proportional to that charge. The signal is then read out either one line at a time at a specified line rate, or for an entire area array of pixels at a specified frame rate.
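The integration behavior described above can be sketched as a simple linear model: charge accumulates in proportion to the light and the integration time until it reaches the FWC. The conversion fraction `qe` anticipates the quantum efficiency covered in the next section. The photon rate, the 70% conversion fraction, and the function name are hypothetical, and the model ignores noise and dark current.

```python
def integrate_pixel(photons_per_s: float, qe: float, exposure_s: float,
                    fwc_e: float) -> tuple[float, bool]:
    """Electrons collected during one integration period.

    Charge grows linearly with exposure time until the well is full; anything
    beyond the full well capacity cannot be stored (the pixel is saturated).
    """
    electrons = photons_per_s * qe * exposure_s
    saturated = electrons >= fwc_e
    return min(electrons, fwc_e), saturated


# Hypothetical pixel: 1,000,000 photons/s arriving, 70% converted, 20,000 e- full well.
for t in (0.010, 0.020, 0.050):
    e, sat = integrate_pixel(1e6, 0.7, t, 20_000)
    print(f"{t * 1e3:.0f} ms -> {e:,.0f} e-{' (saturated)' if sat else ''}")
```

Doubling the exposure doubles the charge until the well fills; beyond that point, extra exposure adds no information for that pixel.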

Quantum Efficiency and Signal/Noise Ratio

A pixel’s ability to convert photons into electrons is called its quantum efficiency (QE). As photons enter the pixel, they generate electrons. At the same time, however, heat and other factors also generate some electrons, which show up as noise. The goal for each pixel is to have many more electrons generated by light than by heat and other factors. In other words, image sensors that produce more signal (electrons from light) than noise (electrons from heat and other factors) have a high signal-to-noise (S/N) ratio.
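One way to see why QE matters is a simplified pixel noise model in which light-generated electrons, thermally generated (dark) electrons, and readout noise combine. Besides the dark noise described above, the sketch includes photon shot noise, a property of light itself, using the usual square-root (Poisson) treatment. This is similar in spirit to the model in the EMVA 1288 standard, but the function names and parameter values here are hypothetical.

```python
import math


def pixel_snr(photons: float, qe: float, dark_e: float = 0.0, read_noise_e: float = 0.0) -> float:
    """Signal-to-noise ratio of one pixel under a simplified noise model.

    signal      = photons * qe   (electrons generated by light)
    noise (rms) = sqrt(signal + dark_e + read_noise_e**2)
    Photon shot noise, dark-current shot noise and read noise are assumed
    independent, so their variances add.
    """
    signal = photons * qe
    noise = math.sqrt(signal + dark_e + read_noise_e ** 2)
    return signal / noise


def snr_db(snr: float) -> float:
    """S/N expressed in decibels."""
    return 20 * math.log10(snr)


# Hypothetical comparison: same light (5,000 photons), same noise sources, different QE.
for qe in (0.4, 0.8):
    s = pixel_snr(5_000, qe, dark_e=50, read_noise_e=5)
    print(f"QE {qe:.0%}: S/N = {s:.1f} ({snr_db(s):.1f} dB)")
```

With everything else held equal, the higher-QE pixel turns more of the same light into signal and reaches a higher S/N.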

Image sensors with a high S/N ratio, low noise, and large FWC will have a large dynamic range. Dynamic range is the ratio of the maximum to the minimum measurable charge. During readout, the analog voltage is converted to a digital number, a step called A/D (analog-to-digital) conversion. For example, if a camera’s back-end circuitry provides 8-bit A/D conversion, it can represent 256 (2^8) levels. If the pixel charge read out is 0, the pixel has no electrons and is black. If the pixel is fully saturated at its maximum charge, it is white and has a count of 255.
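Expressed numerically, dynamic range is often quoted as the FWC divided by the noise floor, either as a plain ratio, in decibels (20·log10 of the ratio), or in bits (log2 of the ratio). The sketch below takes the read noise as the minimum measurable charge, a common convention but a simplification. The sensor values are hypothetical.

```python
import math


def dynamic_range(fwc_e: float, read_noise_e: float) -> tuple[float, float, float]:
    """Return (ratio, decibels, bits) for a sensor.

    The minimum measurable charge is approximated here by the read-noise floor.
    """
    ratio = fwc_e / read_noise_e
    return ratio, 20 * math.log10(ratio), math.log2(ratio)


# Hypothetical sensor: 20,000 e- full well, 5 e- read noise.
ratio, db, bits = dynamic_range(20_000, 5)
print(f"{ratio:.0f}:1, {db:.1f} dB, ~{bits:.1f} bits")
print("8-bit A/D levels:", 2 ** 8)
```

For this hypothetical sensor, the ratio of about 4,000:1 corresponds to roughly 12 bits, so an 8-bit conversion with 256 levels could not represent the full range in noise-floor-sized steps.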

The A/D resolution determines how many grayscale divisions exist. A low S/N means a lot of signal is lost in the noise, down in the black. With only 256 levels, for example, it can be difficult to differentiate a feature on an object that contrasts only slightly with its background and surrounding parts. A 10-bit A/D, however, provides 2^10 = 1,024 levels. If there’s a subtle grayscale difference between the feature of interest and the surrounding area, a greater number of grayscale divisions increases the likelihood of being able to differentiate the feature.
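The effect of bit depth on a subtle contrast can be shown with an ideal, noise-free quantizer. The 20,000 e- full well and the two charge levels are hypothetical, and the sketch isolates quantization only. In a real image, noise also limits how small a difference can be detected.

```python
import math


def to_dn(electrons: float, fwc_e: float, bits: int) -> int:
    """Map pixel charge to a digital number: 0 = black, 2**bits - 1 = saturated white.

    Ideal, noise-free quantization with round-half-up; real A/D converters
    also add noise and may not use the full range.
    """
    max_dn = 2 ** bits - 1
    clipped = min(max(electrons, 0.0), fwc_e)
    return math.floor(clipped / fwc_e * max_dn + 0.5)


# Hypothetical scene: background at 990 e-, a feature 60 e- brighter, 20,000 e- full well.
background, feature, fwc = 990, 1_050, 20_000
for bits in (8, 10):
    print(bits, "bit:", to_dn(background, fwc, bits), "vs", to_dn(feature, fwc, bits))
```

At 8 bits, both levels land on the same code (13), so the feature vanishes in the digital image. At 10 bits, they map to 51 and 54 and remain distinguishable.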

Pixel Size

Larger pixels often have the advantage in image quality. In general, compared with smaller pixels, larger pixels offer a higher S/N ratio and dynamic range thanks to their bigger “buckets” for collecting electrons, and their larger surface area captures more light. However, this benefit comes at a price: for a given resolution, larger pixels mean a larger sensor, which costs more. Why are larger sensors pricier? Think of the silicon wafer on which multiple sensors are fabricated. The cost of producing the wafer is divided among all the sensors it yields. Smaller sensors fit more copies on a single wafer, effectively lowering the cost per sensor. This is why smaller sensors are significantly cheaper than their larger counterparts.

Beyond the ideal scenario, factory yield also plays a crucial role. Not every sensor manufactured is perfect. A large sensor covers more wafer area, so it is more likely to contain a defect, and because only a few large sensors fit on each wafer, every defective one removes a bigger share of the usable output. Therefore, choosing the “biggest and best” isn’t always the most sensible approach. While larger pixels offer performance advantages, smaller ones can be a cost-effective alternative, especially when size constraints or budgetary concerns come into play.
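The wafer economics above can be roughed out with two textbook approximations: a dies-per-wafer estimate and a Poisson yield model, in which the chance of a defect-free die falls exponentially with die area. Both are simplifications. They ignore scribe lines, the readout circuitry around the pixel array, and the fact that some pixel defects can be mapped out. The defect density and sensor sizes below are hypothetical.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Common approximation: wafer area / die area, minus a loss term for partial dies at the edge."""
    d = wafer_diameter_mm
    whole = math.pi * (d / 2) ** 2 / die_area_mm2
    edge_loss = math.pi * d / math.sqrt(2 * die_area_mm2)
    return int(whole - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with no killer defect, assuming randomly scattered defects."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


def good_dies(wafer_diameter_mm: float, die_area_mm2: float, defects_per_mm2: float) -> float:
    """Expected number of defect-free dies per wafer."""
    return gross_dies_per_wafer(wafer_diameter_mm, die_area_mm2) * poisson_yield(die_area_mm2, defects_per_mm2)


# Hypothetical 8 MP sensors on a 300 mm wafer; die area approximated by the pixel array only.
pixels = 8e6
for pitch_um in (2.74, 5.5):
    area = pixels * (pitch_um / 1000) ** 2           # mm^2
    print(f"{pitch_um} um pixels: {area:.0f} mm^2 die, "
          f"{gross_dies_per_wafer(300, area)} gross, "
          f"{good_dies(300, area, 0.001):.0f} good")
```

Roughly doubling the pixel pitch quadruples the die area. Edge losses cut the gross die count by more than 4x, and the yield penalty widens the gap further.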

Imager Size

Imager size plays a crucial role in camera performance, but the naming conventions can be confusing. Terms such as “quarter-inch” and “half-inch” might seem outdated, especially when the actual dimensions are in millimeters. So, where do these terms come from, and what do they really mean? They hark back to the era of vacuum-tube video cameras, when imaging tubes (vidicons) were classified by the outer diameter of the glass tube. The usable image area inside each tube was considerably smaller than that diameter.

Solid-state sensors inherited these “type” designations as approximate equivalents of the tube formats, so the name does not equal the sensor’s actual diagonal. For instance, a 1-inch-type sensor has a diagonal of roughly 16 mm rather than 25.4 mm, and a 1/2-inch-type sensor has a diagonal of roughly 8 mm. Because the names are only nominal, actual sensor sizes are specified in millimeters, which allows accurate comparisons and compatibility calculations with lenses and other camera components.

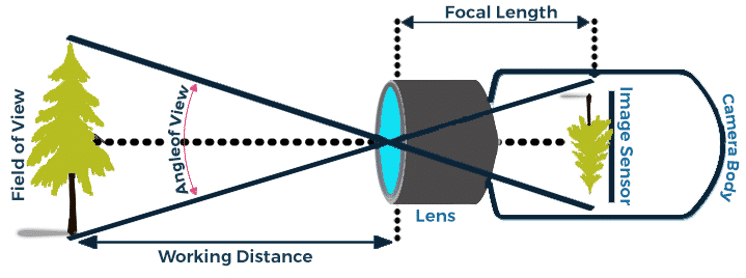

Sensor Diagonal Size

The most important dimension for choosing a lens is the sensor’s diagonal size. This determines the minimum diameter of the image circle the lens needs to project to cover the entire sensor. Cameras often specify sensor sizes using these familiar “quarter-inch” to “one-inch” terms, but it’s important to consult the manual or manufacturer’s website for the actual diagonal measurement in millimeters.

The lens needs to cast an image circle that completely covers the sensor’s diagonal. A 1-inch-type sensor, for example, demands a lens rated for at least the 1-inch format to avoid vignetting or incomplete image capture. Lenses with larger image circles can be used, but part of the projected image then falls outside the sensor, which can waste the lens’s resolution potential. Conversely, a lens whose image circle is smaller than the sensor diagonal illuminates only a central portion of the sensor, leaving the corners dark or cut off.
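When a data sheet lists resolution and pixel pitch but not the diagonal, the diagonal can be computed directly and compared with the lens’s specified image circle. The sensor values below are hypothetical. Always confirm the image circle against the lens manufacturer’s specification.

```python
import math


def sensor_diagonal_mm(h_pixels: int, v_pixels: int, pixel_um: float) -> float:
    """Active-area diagonal computed from resolution and pixel pitch."""
    width_mm = h_pixels * pixel_um / 1000
    height_mm = v_pixels * pixel_um / 1000
    return math.hypot(width_mm, height_mm)


def lens_covers_sensor(image_circle_mm: float, sensor_diag_mm: float) -> bool:
    """The lens image circle must be at least as large as the sensor diagonal."""
    return image_circle_mm >= sensor_diag_mm


# Hypothetical sensor: 4096 x 3000 pixels with a 3.45 um pitch.
diag = sensor_diagonal_mm(4096, 3000, 3.45)
print(f"diagonal = {diag:.1f} mm")
for circle in (16.0, 17.6):
    print(f"{circle} mm image circle covers sensor: {lens_covers_sensor(circle, diag)}")
```

For this sensor, a lens specified for a 16 mm image circle would vignette, while one covering about 17.6 mm or more would illuminate the full diagonal.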

In metrology applications, where precise measurements are paramount, intentionally oversizing the lens relative to the sensor can be advantageous. This leverages the lens’ high-performing central region for optimal detail resolution. Choosing a lens with a smaller image circle will lead to vignetting (dark corners) or even cropped images. Conversely, a much larger image circle than necessary might be overkill and affect performance or cost.

Understanding sensor size and its relation to lens compatibility is crucial for informed camera selection. Don’t just rely on the “quarter-inch” or “half-inch” labels; always check the diagonal measurement and ensure the chosen lens can cover the sensor adequately. However, if a lens compatible with the specified camera is unavailable, the designer may have to choose a different camera.

Microlenses Improve Smaller Pixel Performance

Ever wonder how camera sensors keep getting smaller and more powerful while still capturing stunning detail? Part of the magic lies in tiny lenses called microlenses perched on top of each pixel. Imagine a cross-section of a camera sensor. At the bottom sits the silicon wafer, where the pixels reside. These tiny light-sensitive areas are like buckets, collecting photons to create the image you see. Above the pixels lies a layer of intricate metal wiring. These “veins” carry electrical signals that control each pixel, telling them when to start and stop collecting light and, ultimately, how to translate that data into an image.

For color cameras, a special layer of red, green, and blue filters sits on top of the pixels. These filters act like tiny gates, allowing only specific wavelengths of light through, creating the color information for each pixel. Now, imagine light rays entering the sensor at various angles. Some might miss the tiny pixel buckets and escape capture. Enter the microlens! These miniature lenses sit atop each pixel, like tiny magnifying glasses. They bend and focus light rays, ensuring that even those entering at an angle reach the light-sensitive area.

Capture More Light

Microlenses significantly increase the amount of light captured by each pixel, which brings two benefits: enhanced sensitivity and improved resolution. With enhanced sensitivity, sensors can capture more detail, even in low-light conditions, and the extra light translates into sharper, clearer images with finer detail. However, this performance boost comes at a cost. Microlenses are permanent fixtures bonded to the sensor, and while they boost overall light capture, they can also block UV light.

Some microlenses are made of materials that absorb UV light, limiting the sensor’s ability to capture certain types of information. Microlenses also limit lens flexibility. Because they collect light most efficiently over a limited range of incidence angles, lenses that deliver light to the sensor at steep angles can cause shading or reduced sensitivity toward the image edges.

Microlenses vary in size and shape depending on the specific sensor and its intended use. Advances in microlens technology are ongoing, with researchers exploring new materials and designs to overcome current limitations.

Microlenses are a crucial innovation in sensor technology, enabling smaller, more efficient sensors without sacrificing image quality. But their permanent nature and potential limitations in UV sensitivity and lens compatibility are important factors to consider. The choice between a sensor with or without microlenses depends on your specific needs and priorities. For low-light conditions or applications requiring high resolution, microlenses offer significant advantages.

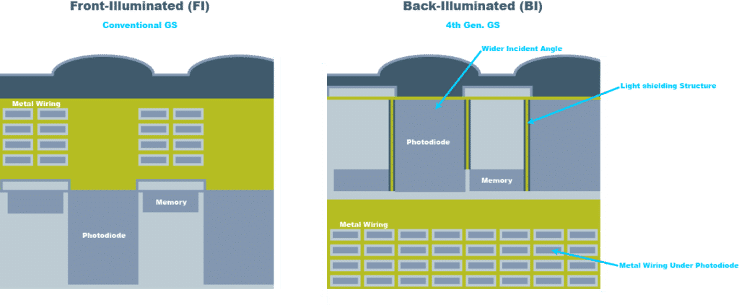

Back-Illuminated Sensors

The quest for capturing more light and achieving stunning image quality in compact sensors has led to exciting innovations. One such advancement is the back-illuminated sensor with microlenses. This technology flips the script on traditional sensor design, offering several advantages. Imagine a sensor’s cross-section. Typically, microlenses sit atop the pixels, gathering light and directing it through layers of metal wiring before reaching the light-sensitive areas. In back-illuminated sensors, the roles are reversed. Microlenses are integrated with the color filters on the back of the sensor, allowing light to enter directly into the pixels without traversing the metal wiring.

Allowing the photons to enter the backside of the sensor greatly improves S/N. The benefits include increased light capture, improved sensitivity, and enhanced dynamic range. Eliminating the obstacle of metal wiring significantly boosts the amount of light reaching each pixel, resulting in improved sensitivity for capturing sharper images in low-light conditions and enhanced dynamic range for improved capture of details in bright and dark areas of a scene.

Back Thinning Wafers

To effectively implement this back-illuminated sensor technology, the silicon substrate needs to be thinned to allow light to pass through with minimal absorption. However, wafer thinning is a delicate process, often considered a “secret recipe” in foundries. It happens after the entire sensor has been fabricated, making any mistakes costly. Imagine transitioning from the controlled world of gaseous vacuum thin-film processing to the messy realm of mechanical lapping with a slurry. This unconventional approach, akin to polishing a diamond, might seem unusual in a semiconductor foundry, but it’s crucial for achieving the desired thinness. Yet there are several challenges and trade-offs.

While back-illuminated sensors with microlenses offer significant advantages, there are still some challenges including UV sensitivity limitations and increased complexity and costs due to the additional processing steps and specialized techniques involved in manufacturing these sensors. Despite these challenges, back-illuminated sensor technology with microlenses represents a major leap forward in sensor performance.

Because back-illuminated sensors have become common in phone cameras, a significant increase in research and development has led to further improvements in quantum efficiency, overall sensitivity, and UV response, paving the way for even more versatile imaging solutions. The latest CMOS sensors use specific microlens designs and materials in back-illuminated sensors that can further optimize UV sensitivity. Alternative thinning techniques have also helped improve yield and reduce costs.

While technologies such as electron-multiplying CCDs (EMCCDs) and time-delay integration (TDI) offer even higher sensitivity, they are more complex and fall outside the scope of this discussion. Nonetheless, it’s important to know that various techniques are available that can significantly enhance low-light performance in specific camera types.

Rolling vs. Global Shutter Sensors

Not all cameras are created equal. Image sensors offer two shutter types: rolling and global. When it comes to capturing high-speed objects or web processes, this choice is crucial. In general, the right sensor depends on application constraints such as speed and precision.

A rolling shutter works like a fast scanner, exposing and reading out lines of data one after another. Rolling shutter sensors are great for many everyday imaging tasks and for capturing still scenes. But because each row is captured at a slightly different time, fast-moving objects can appear skewed or distorted, which is a problem for high-speed motion or precise measurements.

A global shutter can be thought of as a camera capturing the entire scene at once, like a snapshot, which is perfect for capturing fast-moving objects without distortion. Global shutter sensors are ideal for scientific applications and accurate measurements.
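The distortion a rolling shutter introduces can be estimated from the sensor’s line time, the delay between the start of exposure of successive rows. The sketch assumes horizontal motion at constant speed and uses a hypothetical line time. Real values come from the sensor data sheet.

```python
def rolling_shutter_skew_px(speed_px_per_s: float, line_time_s: float, rows: int) -> float:
    """Horizontal offset between the first and last row of a moving object.

    A rolling shutter starts each row's exposure one line time after the
    previous row, so an object spanning `rows` rows is sampled over
    rows * line_time_s seconds and shifts by speed * that delay.
    A global shutter exposes every row at the same moment, so its skew is zero.
    """
    return speed_px_per_s * line_time_s * rows


# Hypothetical: object crossing the image at 2,000 px/s, 10 us line time, 1,000 rows tall.
print(f"skew = {rolling_shutter_skew_px(2_000, 10e-6, 1_000):.1f} px")
```

A 20-pixel lean on an object that should be upright is exactly the kind of error that undermines precise measurement, which is why global shutters are preferred for fast motion.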

Line Scan Cameras

Ever wonder how cameras capture images of things moving on conveyor belts? Line scan cameras are one way to accomplish this task. A line scan camera uses a linear (1D) image sensor to capture a single line of the image at a time, from which it builds up a 2D image for analysis. Think of it as a beam scanning a single line at high speed. This makes line scan cameras well suited to inspecting moving objects, such as products on conveyor belts, and to web processes. Because they build a complete image line by line, they must be synchronized with the object’s movement.

Here’s how it works: Imagine a conveyor belt with a package moving along the top of it. A line scan camera has a long, thin sensor that, when equipped with the appropriate lens, spans the width of the conveyor and captures a single line of pixels at a time. If the package isn’t moving, the camera captures the same line over and over, revealing very little of the package. As the package moves, however, the camera captures line after line, building up a complete image.

When the line scan camera is triggered in sync to the motion, an image can be produced for as long as needed. This gives line scan cameras the ability to cost-effectively capture high-resolution images of large objects. In addition to large, moving objects, line scan cameras can also be used to image rotating cylindrical objects. If motion is consistent, or a speed sensor or encoder can be used, then a line scan camera may be cost-effective.
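Synchronizing a line scan camera to motion usually means choosing a line rate (or encoder trigger spacing) so that the object advances by one object-space pixel per line. Otherwise, the image is stretched or compressed along the direction of travel. This sketch assumes constant speed and square object pixels. The web width, speed, and function names are hypothetical.

```python
def object_pixel_mm(fov_width_mm: float, pixels_per_line: int) -> float:
    """Cross-web size of one pixel on the object (mm)."""
    return fov_width_mm / pixels_per_line


def required_line_rate_hz(speed_mm_s: float, fov_width_mm: float, pixels_per_line: int) -> float:
    """Line rate that advances the object by one pixel per line (square object pixels)."""
    return speed_mm_s / object_pixel_mm(fov_width_mm, pixels_per_line)


def max_speed_mm_s(max_line_rate_hz: float, fov_width_mm: float, pixels_per_line: int) -> float:
    """Fastest transport speed a given line rate can keep up with at square pixels."""
    return max_line_rate_hz * object_pixel_mm(fov_width_mm, pixels_per_line)


# Hypothetical web: 1,000 mm wide, imaged by a 4,096-pixel line at 2 m/s.
print(required_line_rate_hz(2_000, 1_000, 4_096), "Hz")   # 8192.0 Hz
print(max_speed_mm_s(172_000, 1_000, 4_096) / 1000, "m/s")
```

If the transport speed varies, triggering each line from an encoder keeps the object-space line spacing constant even when the belt speeds up or slows down.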

Emergent offers a range of line scan cameras in 10GigE, 25GigE, and 100GigE configurations, designed for demanding, high-speed line scan imaging. These camera families are built for dependable deployment, providing zero-copy and zero-data-loss imaging. The lineup ranges from the LR-4KG35 10GigE line scan camera, which reaches line rates of 172 kHz, to the LZ-16KG5 100GigE line scan camera, which achieves 400 kHz.

Cost-Effective High-Resolution Imaging

Line scan cameras offer a surprising advantage in terms of cost. They achieve this by packing more pixels onto a single wafer compared to area array cameras. Because line scan technology only captures a single line at a time, it allows for denser pixel placement, which translates to more pixels per wafer. Line scan sensors are commonly available with 2000, 4000, even 16,000 pixels. Comparable area arrays would be gigantic and incredibly expensive.

In general, a 1K line scan sensor is less expensive than a 1K area array due to the higher packing density, which results in a lower cost per pixel. If the application involves high-resolution imaging of moving objects (e.g., on conveyor belts) and the movement can be controlled, line scan cameras are a cost-effective option. Line scan cameras can also capture color information.

However, line scan cameras have certain limitations. Lighting needs to be precise, and because each line receives only a very short exposure, the lens aperture usually has to be opened wide. This reduces the depth of field, making it harder to keep objects at different distances from the camera in focus.

Area Scan Cameras

While line scan cameras shine when objects move at a consistent speed, allowing for precise image capture and efficient image processing, area scan cameras are better for stationary objects and scenes with unpredictable movement and greater depth of field requirements. Area scan cameras with area scan sensors have a large matrix, or array, of pixels that produces a 2D image in a single exposure.

Compared to line scan cameras, area scan cameras typically require less light, and lighting is usually easier to install because of the rectangular field of view. Additionally, area scan cameras can capture short exposure images using strobe lighting, which brings large amounts of light to the sensor quickly. The majority of image acquisition applications use area scan cameras.

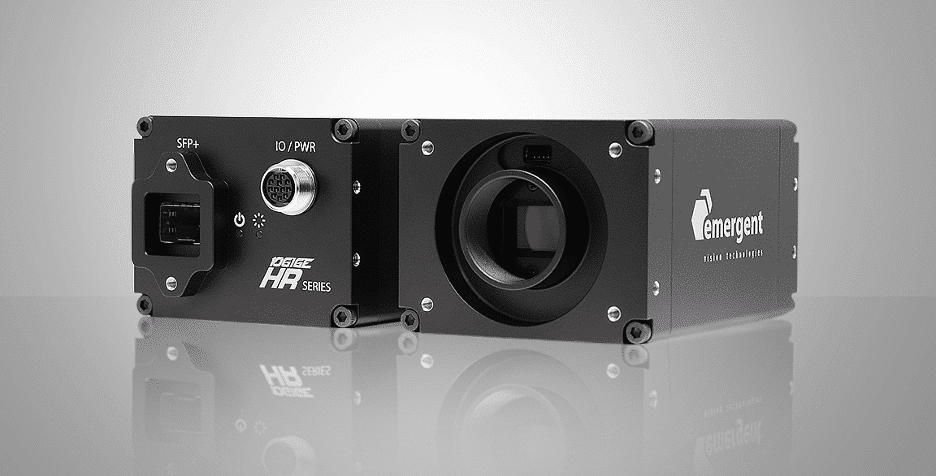

Some applications might necessitate high-resolution cameras, particularly in situations where precise visualization of small details or comprehensive inspection of large parts is essential. To cater to such requirements, Emergent presents an extensive array of high-resolution models spanning various camera families, such as Eros 5GigE, HR 10GigE, and Bolt 25GigE cameras.

These models incorporate advanced IMX sensors from Sony Pregius S series, including:

- 5.1MP IMX547: HE-5000-SBL 5GigE camera (45.5fps), HR-5000-SBL 10GigE camera (99fps)

- 8.1MP IMX546: HE-8000-SBL 5GigE camera (36.5fps), HR-8000-SBL 10GigE camera (73fps)

- 12.4MP IMX545: HE-12000-SBL 5GigE camera (34fps), HR-12000-SBL 10GigE camera (68fps)

- 16.13MP IMX542: HE-16000-SBL 5GigE camera (26fps), HR-16000-SBL 10GigE camera (52fps)

- 20.28MP IMX541: HE-20000-SBL 5GigE camera (21.5fps), HR-20000-SBL 10GigE camera (43fps)

- 24.47MP IMX540: HE-25000-SBL 5GigE camera (17.5fps), HR-25000-SBL 10GigE camera (35fps)

The 25GigE Bolt series also leverages the 5.1MP IMX537 in its HB-5000-SB (269fps), 8.1MP IMX536 in its HB-8000-SB (201fps), 12.3MP IMX535 in its HB-12000-SB (192fps), 20.28MP IMX531 in its HB-20000-SB, and the 24.47MP IMX530 in its HB-25000-SB (98fps). The available options extend to higher resolutions with cameras like the Zenith HZ-100-G (103.7MP Gpixel GMAX32103) and Bolt HB-127-S (127.7MP Sony IMX661).

Interface and connectivity options can also significantly impact camera choice, as can the camera’s ease of use, its control options, and the connectivity ports available for integrating it with other systems. Beyond the factors discussed so far, several additional considerations can shape your camera selection.

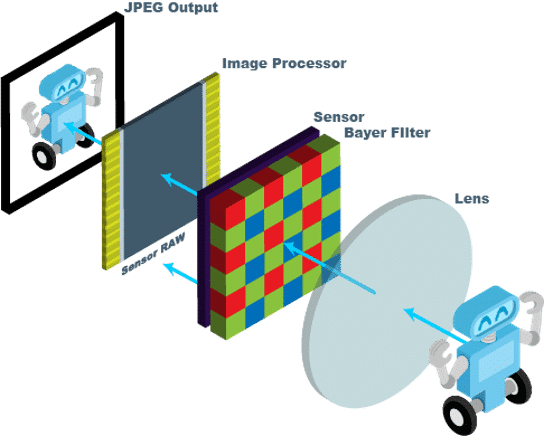

Color vs. Monochrome

While color rendition may be important for certain applications such as medical diagnostics, monochrome cameras often present a viable alternative. In general, monochrome cameras offer several advantages. They typically exhibit higher sensitivity to light than color cameras, allowing for better performance in low-light conditions. In addition, monochrome cameras are generally more affordable than their color counterparts, and monochrome images require less storage space, making them preferable for applications where data storage is limited.

The most common color cameras use a single sensor chip, and the most prevalent color filter arrangement is the Bayer pattern. The pattern repeats in 2x2 blocks of pixels: in each block, one pixel is covered by a red filter, two by green filters, and one by a blue filter. Two green filters are used because the human eye is most responsive to green wavelengths. This helps the sensor reproduce colors faithfully, mirroring how humans perceive them.

These color filters are integral; they’re permanently fixed atop the pixels, making it impossible to remove or alter them. They selectively permit certain wavelengths of light to reach each pixel, thereby generating the characteristic two green, one red, and one blue pattern of the Bayer filter. However, it’s important to note that only about a third of the incoming photons successfully pass through these filters.

Consequently, color cameras generally require more light than monochrome cameras, because each color pixel receives only the portion of light its filter passes, while monochrome pixels have no color filter blocking light. In addition, each pixel records only one color channel, so recreating the full color image requires interpolation. Imagine a tiny grid of pixels on your monitor: each pixel needs a mix of red, green, and blue to represent the actual color.

Demosaicing algorithms analyze the surrounding red, green, and blue pixels to estimate this mix for each pixel, but this process can slightly blur edges and reduce perceived resolution. For precise color accuracy, professional photographers use a Macbeth ColorChecker. This standardized chart contains a range of colors, allowing a comparison of a camera’s captured colors to the reference, which can also be useful in automated inspection camera applications involving correct color identification. Pay particular attention to reds, as they can be tricky for a vision system camera.
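A ColorChecker comparison is usually scored as a color difference (ΔE) in CIELAB space. The Python sketch below converts an 8-bit sRGB pixel to CIELAB (D65 white point) and computes the simple CIE76 ΔE against a reference value. It assumes the camera output is already sRGB-encoded, which raw machine vision output often is not. The reference Lab values must come from the chart’s published data, and the function names are illustrative. CIEDE2000 is a more perceptually uniform alternative to CIE76.

```python
import math

# D65 reference white in CIE XYZ (Y normalized to 1).
WHITE_D65 = (0.95047, 1.0, 1.08883)


def srgb_to_lab(rgb: tuple[int, int, int]) -> tuple[float, float, float]:
    """Convert an 8-bit sRGB triple to CIELAB (L*, a*, b*)."""
    def linearize(v: int) -> float:
        # Undo the sRGB transfer curve.
        c = v / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(v) for v in rgb)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b

    def f(t: float) -> float:
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = (f(v / n) for v, n in zip((x, y, z), WHITE_D65))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def delta_e76(lab1, lab2) -> float:
    """CIE76 color difference: Euclidean distance in CIELAB."""
    return math.dist(lab1, lab2)
```

In use, a captured patch would be compared as `delta_e76(srgb_to_lab(measured_rgb), reference_lab)`, where `measured_rgb` and `reference_lab` are placeholders for the averaged patch pixel and the chart’s published value.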

Shortwave Infrared (SWIR) and Polarization Imaging

Specific scenarios demand specialized imaging technologies, such as identifying anti-counterfeiting watermarks or security codes on labels, or imaging through bottles to verify fill levels. SWIR cameras such as those offered by Emergent Vision Technologies can be reliably deployed in such cases. Emergent’s Eros 5GigE camera series includes the HE-300-S-I, HE-1300-S-I, HE-3200-S-I, and HE-5300-S-I, equipped with Sony SenSWIR sensors that capture images across the 400 to 1700 nm range. These cameras use Sony’s 0.33MP IMX991, 1.31MP IMX990, 3.14MP IMX993, and 5.24MP IMX992 sensors, respectively.

Traditional imaging methods struggle to inspect shiny or reflective materials because of glare. Polarization cameras such as Emergent’s HR-12000-S-P, HR-5000-S-P, HE-5000-S-PM (monochrome), and HE-5000-S-PC (color) address these issues by enhancing brightness and color and enabling the detection of minute details often missed by regular sensors. Suitable for scenarios that require differentiating reflected and transmitted scenes, polarization imaging is useful for identifying surface irregularities such as dirt, raised areas, indentations, scratches, and deformations.

These polarization cameras use Sony’s 5MP IMX250MZR (mono) and IMX250MYR (color) sensors, as well as the 12MP IMX253MZR (mono) and IMX253MYR (color) sensors. These sensors integrate microscopic wire-grid polarizers on each pixel, with polarization angles of 0°, 45°, 90°, and 135° arranged in four-pixel clusters. Because polarization information is computed from each four-pixel cluster, the effective resolution of the polarization output is reduced by 4x: each four-pixel block translates to a single output pixel.
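Each four-pixel cluster yields one polarization measurement. A common way to summarize it is with the linear Stokes parameters, from which the degree of linear polarization (DoLP) and the angle of linear polarization (AoLP) follow. This Python sketch assumes a cluster layout of 90°/45° on the top row and 135°/0° on the bottom row. Confirm the actual arrangement in the sensor datasheet before relying on it; the layout is a parameter for that reason. The output is half the raw width and height, which is the 4x reduction in effective resolution.

```python
import math

# Assumed polarizer angles (degrees) within each 2x2 cluster; verify per datasheet.
CLUSTER_LAYOUT = ((90, 45), (135, 0))


def polarization_image(raw, layout=CLUSTER_LAYOUT):
    """Return one (S0, DoLP, AoLP_degrees) tuple per 2x2 cluster of a raw frame."""
    h, w = len(raw), len(raw[0])
    result = []
    for r in range(0, h - 1, 2):
        row = []
        for c in range(0, w - 1, 2):
            # Map each polarizer angle to its measured intensity in this cluster.
            i = {layout[dr][dc]: float(raw[r + dr][c + dc]) for dr in (0, 1) for dc in (0, 1)}
            s0 = (i[0] + i[45] + i[90] + i[135]) / 2  # total intensity
            s1 = i[0] - i[90]                          # 0 vs. 90 degree component
            s2 = i[45] - i[135]                        # 45 vs. 135 degree component
            dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
            aolp = math.degrees(0.5 * math.atan2(s2, s1))
            row.append((s0, dolp, aolp))
        result.append(row)
    return result
```

For light fully polarized at 0°, Malus’s law gives relative intensities of 100, 50, 0, and 50 behind the 0°, 45°, 90°, and 135° polarizers. With the assumed layout, that cluster is `[[0, 50], [50, 100]]`, and the function returns a DoLP of 1.0 and an AoLP of 0°.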

High-Speed Imaging

If the video is primarily for analysis, prioritize features such as high frame rate to capture fast-moving subjects and enable smooth, slow-motion playback. High resolution provides detailed images for accurate data analysis, and a high dynamic range can capture realistic lighting and avoid losing detail in bright or dark areas. For live video feed, prioritize features such as low latency to stream video with minimal delay for real-time response, as well as efficient data compression to reduce bandwidth usage for smooth streaming.

Recording options can also play a significant role in camera choice. Live streaming without recording is ideal for applications where real-time analysis is crucial and data retention is not required. High-speed recording, which captures fast-moving events for later analysis, instead requires high-performance storage. Keep in mind that higher-resolution and higher-frame-rate cameras demand more storage space. Compressed recording formats offer smaller file sizes but may sacrifice image quality. Depending on data volume and access-speed requirements, consider RAM, RAID arrays, or solid-state drives.
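A quick back-of-the-envelope estimate shows why resolution and frame rate drive storage requirements. This Python sketch assumes uncompressed frames with a fixed bit depth and ignores file-format and filesystem overhead. The sensor dimensions and drive size in the example are hypothetical.

```python
def data_rate_bytes_per_s(width: int, height: int, fps: float, bits_per_pixel: int = 8) -> float:
    """Uncompressed sensor output rate in bytes per second."""
    return width * height * fps * bits_per_pixel / 8


def recording_seconds(capacity_bytes: float, width: int, height: int,
                      fps: float, bits_per_pixel: int = 8) -> float:
    """How long an uncompressed recording fits in the given storage capacity."""
    return capacity_bytes / data_rate_bytes_per_s(width, height, fps, bits_per_pixel)


# Hypothetical example: a 2048 x 1224 (about 2.5MP) 8-bit stream at 1000 fps into 1 TB.
rate = data_rate_bytes_per_s(2048, 1224, 1000)
print(f"{rate / 1e9:.2f} GB/s")                                    # 2.51 GB/s
print(f"{recording_seconds(1e12, 2048, 1224, 1000):.0f} s per TB")  # 399 s per TB
```

At roughly 2.5 GB/s, a terabyte fills in under seven minutes. This is why sustained write speed, not just capacity, decides between RAM buffering, RAID arrays, and single solid-state drives.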

As more imaging applications extend beyond factory settings, GigE Vision’s capacity to simplify operations becomes even more important for original equipment manufacturers (OEMs). Cable length becomes crucial where there is considerable distance between the camera and the PC, as in surveillance, transportation, and sports technology applications. The availability of cost-effective transceivers such as SFP+ (10G), SFP28 (25G), and QSFP28 (100G) allows single-mode fiber runs of 10 km or more.

Zero-Copy Imaging Technique for High-Speed Machine Vision

In the context of GigE Vision, transports such as transmission control protocol (TCP), remote direct memory access (RDMA), and RDMA over Converged Ethernet (RoCE and RoCE v2) have come into use because of a specific challenge. The receiver must disassemble Ethernet packets and strip their headers so that image data can be delivered to the application as one contiguous buffer. This can be done in software, but it incurs a performance penalty: roughly three times the memory bandwidth and higher CPU usage. RDMA users often highlight this drawback when comparing traditional GigE Vision with RDMA.
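The software depacketization path can be sketched in Python. Each received packet carries a header followed by a slice of the image, and the receiver copies the payloads into one contiguous frame buffer. The 8-byte header with a 4-byte packet index is a hypothetical simplification, not the actual GVSP packet layout. The memory-traffic count also uses a simplified model: the NIC writes each packet to memory, the CPU reads it, and the CPU writes the payload into the frame. That model is one way to arrive at the roughly threefold figure.

```python
HEADER_LEN = 8  # hypothetical header: 4-byte packet index + 4 reserved bytes


def packetize(frame: bytes, payload_len: int) -> list[bytes]:
    """Split a frame into (header + payload) packets, as a sender would."""
    packets = []
    for index, start in enumerate(range(0, len(frame), payload_len)):
        header = index.to_bytes(4, "big") + bytes(HEADER_LEN - 4)
        packets.append(header + frame[start:start + payload_len])
    return packets


def depacketize(packets: list[bytes], frame_len: int, payload_len: int) -> tuple[bytes, int]:
    """Strip headers and copy payloads into one contiguous frame.

    Also returns estimated memory traffic in bytes under a simple model:
    NIC DMA write + CPU read + CPU write of every payload byte.
    """
    frame = bytearray(frame_len)
    traffic = 0
    for packet in packets:
        view = memoryview(packet)                # slicing a memoryview does not copy
        index = int.from_bytes(view[:4], "big")
        payload = view[HEADER_LEN:]
        offset = index * payload_len             # packets may arrive out of order
        frame[offset:offset + len(payload)] = payload  # the software copy step
        traffic += 3 * len(payload)
    return bytes(frame), traffic
```

Under the same model, a NIC that places payloads directly into the frame buffer would need only the single DMA write per byte. That removes the CPU read and write that dominate the software path.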

Emergent Vision Technologies has adopted a zero-copy image transfer methodology, an essential requirement for achieving optimal performance in high-speed imaging. This technique significantly reduces CPU load and memory bandwidth by exploiting the header-splitting capabilities of modern network interface cards (NICs). An accompanying animation demonstrates the effect of zero-copy transfer on memory bandwidth usage when employing the refined GigE Vision Stream Protocol (GVSP). The first part of the animation shows an unoptimized system experiencing buffer overflow in the NIC. The second part shows the smooth, reliable data flow enabled by zero-copy transfer and system optimization.

Budget

Cost plays a vital role when deciding which camera to purchase and can be just as important as resolution and speed. It is highly likely that at this point, the decision to purchase an industrial camera for inspection or for another machine vision task has already been made based on the cost-effectiveness and return on investment (ROI) of the machine vision inspection system.

For machine vision systems, cost determines what can be achieved, and the camera budget follows from these ROI assessments. Although inspection at a very granular level of detail might be appealing, it could also be prohibitively expensive, so camera purchasers must weigh cost against ROI. Working backward from cost is a good starting point: it forces prioritization of the features that are truly essential for the application and ensures financial feasibility.
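Working backward from cost can be reduced to simple payback arithmetic. This Python sketch uses hypothetical figures and a plain payback-period model with no discounting and no maintenance costs. The resulting ceiling covers the whole system, and the camera is only one part of that.

```python
def max_system_cost(monthly_savings: float, target_payback_months: float) -> float:
    """Highest total system cost that still pays back within the target period."""
    return monthly_savings * target_payback_months


def payback_months(system_cost: float, monthly_savings: float) -> float:
    """Months until cumulative savings equal the system cost."""
    return system_cost / monthly_savings


# Hypothetical figures: inspection saves 4,000 per month; the payback target is 12 months.
print(max_system_cost(4_000, 12))      # 48000
print(payback_months(30_000, 4_000))   # 7.5
```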

Because cost is a significant factor in choosing the right camera, features and specifications should be prioritized based on budget constraints. These considerations help narrow down the field of cameras that will be suitable for a specific application. Prioritizing each constraint and evaluating them against the budget will lead to a select few cameras best suited for the given project.

Prioritize Application Constraints

In general, it’s best to start by considering the size of the object to be imaged and the smallest feature that must be distinguished on it. The smaller the area each pixel covers on the object, the smaller the detail that can be resolved. There’s a wide selection of cameras to choose from, ranging from line scan cameras up to 16K and area arrays of 80 MP or higher to high-speed cameras that capture thousands of frames per second.
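The object-size reasoning translates into a quick pixel-count estimate. Divide the field of view by the smallest feature, then multiply by the number of pixels you want across that feature. This Python sketch assumes a rule of thumb of at least 2 pixels per feature (many integrators use 3 or more for robust measurement) and ignores lens resolution limits. The dimensions in the example are hypothetical.

```python
import math


def required_pixels(fov_mm: float, feature_mm: float, pixels_per_feature: float = 2.0) -> int:
    """Minimum sensor pixels along one axis so the smallest feature spans enough pixels."""
    return math.ceil(fov_mm / feature_mm * pixels_per_feature)


def object_pixel_size_mm(fov_mm: float, sensor_pixels: int) -> float:
    """Size on the object covered by one pixel; smaller means finer detail."""
    return fov_mm / sensor_pixels


# Hypothetical: 120 x 80 mm field of view, 0.25 mm smallest feature.
print(required_pixels(120, 0.25), required_pixels(80, 0.25))        # 960 640
print(required_pixels(120, 0.25, 3), required_pixels(80, 0.25, 3))  # 1440 960
```

Moving from 2 to 3 pixels per feature raises the requirement by 50% on each axis, which is often the difference between two camera resolution classes.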

High-resolution and high-speed cameras need high bandwidth. Then there’s cable length and the video interface: analog (RS-170), USB, Camera Link (CL), CoaXPress, and GigE. The choice of interface will often depend on bandwidth and cable length requirements. Keep in mind the various lens mounts, such as M12, CS, C, F, M42, and M75, and note that larger image sensors generally require larger lens mounts. The lens’s image circle must cover the whole sensor: for an area array, that means the sensor diagonal, and for a linear array, the sensor’s length plays the same role.
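Two of these checks are easy to script. This Python sketch estimates the uncompressed bandwidth a camera needs and picks the smallest GigE speed that can carry it. It then computes the minimum image circle a lens must cover. The 90% usable-throughput factor is an assumed allowance for protocol overhead, not a figure from the GigE Vision specification. The 4000 × 3000 sensor is hypothetical, and "16K" is assumed to mean 16,384 pixels.

```python
import math
from typing import Optional

# Nominal Ethernet line rates in Gbit/s, smallest first.
GIGE_RATES = {"1GigE": 1, "2.5GigE": 2.5, "5GigE": 5, "10GigE": 10, "25GigE": 25, "100GigE": 100}


def required_gbps(megapixels: float, fps: float, bits_per_pixel: int = 8) -> float:
    """Uncompressed camera output in Gbit/s."""
    return megapixels * 1e6 * fps * bits_per_pixel / 1e9


def smallest_interface(gbps: float, usable_fraction: float = 0.9) -> Optional[str]:
    """First GigE speed whose assumed usable throughput covers the requirement."""
    for name, rate in GIGE_RATES.items():
        if rate * usable_fraction >= gbps:
            return name
    return None  # nothing in the list is fast enough


def area_image_circle_mm(width_px: int, height_px: int, pixel_um: float) -> float:
    """Sensor diagonal: the minimum image circle for an area-array sensor."""
    return math.hypot(width_px, height_px) * pixel_um / 1000


def line_image_circle_mm(length_px: int, pixel_um: float) -> float:
    """Sensor length: the minimum image circle for a line-scan sensor."""
    return length_px * pixel_um / 1000


print(smallest_interface(required_gbps(5.1, 269)))  # 25GigE
print(area_image_circle_mm(4000, 3000, 3.45))       # 17.25
print(line_image_circle_mm(16384, 5.0))             # 81.92
```

The first example uses the 5.1MP, 269fps figures listed for the HB-5000-SB models and assumes 8-bit pixels. The result is about 11 Gbit/s, which exceeds the assumed usable capacity of 10GigE and lands on 25GigE. Higher bit depths scale the requirement proportionally.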

Various other features that may need to be assessed include selectable ROI (region of interest), FPGAs, on-camera memory, embedded image processing, and more. Decide how large the lens will need to be to meet field of view and working distance requirements. Think about the electromagnetic spectrum and figure out exactly how to illuminate the required features of interest and nothing else. Choose between monochrome and color, UV, polarized, NIR, SWIR, multispectral, or hyperspectral. Considering all of these priorities will help narrow down the cameras appropriate for an application.

GPUDirect: Zero-Data-Loss Imaging

In all its high-speed, high-resolution GigE Vision cameras, Emergent ensures best-in-class performance by using an optimized GigE Vision approach and ubiquitous Ethernet infrastructure for reliable and robust data acquisition and transfer instead of relying on proprietary or point-to-point interfaces and image acquisition boards. In addition, Emergent supports direct transfer technologies such as NVIDIA’s GPUDirect, which enables the transfer of images directly to GPU memory, mitigating the impact of large data transfers on the system CPU and memory. Such a setup leverages the more powerful GPU capability for data processing while maintaining compatibility with the GigE Vision standard and interoperability with compliant software and peripherals.

With Emergent eCapture Pro software, cameras featuring GPUDirect technology can transfer images directly to GPU memory, achieving zero CPU utilization and zero memory bandwidth imaging without data loss.

Emergent cameras:

Using 10-, 25-, and 100GigE Vision technologies, Emergent has demonstrated top performance in a wide range of markets, including machine vision and entertainment. Its extensive camera lineup includes 10GigE, 25GigE, and 100GigE cameras ranging from 0.5MP to 100MP+, with frame rates as high as 3462fps at full 2.5MP resolution, to suit diverse imaging needs.

EMERGENT GIGE VISION CAMERAS FOR MACHINE VISION APPLICATIONS

SWIR, Polarized, and UV Cameras

| Model | Chroma | Resolution | Frame Rate | Interface | Sensor Name | Pixel Size |
|---|---|---|---|---|---|---|
| HE-5300-S-I | SWIR | 5.24MP | 130fps | 1, 2.5, 5GigE | Sony IMX992 | 3.45×3.45µm |
| HE-3200-S-I | SWIR | 3.14MP | 170fps | 1, 2.5, 5GigE | Sony IMX993 | 3.45×3.45µm |
| HE-1300-S-I | SWIR | 1.31MP | 135fps | 1, 2.5, 5GigE | Sony IMX990 | 5×5µm |
| HE-300-S-I | SWIR | 0.33MP | 260fps | 1, 2.5, 5GigE | Sony IMX991 | 5×5µm |
| HE-5000-S-PM | Mono Polarized | 5MP | 81.5fps | 1, 2.5, 5GigE | Sony IMX250MZR | 3.45×3.45µm |
| HE-5000-S-PC | Color Polarized | 5MP | 81.5fps | 1, 2.5, 5GigE | Sony IMX250MYR | 3.45×3.45µm |
| HR-8000-SB-U | UV | 8.1MP | 145fps | 10GigE SFP+ | Sony IMX487 | 2.74×2.74µm |

Area Scan Cameras

| Model | Chroma | Resolution | Frame Rate | Interface | Sensor Name | Pixel Size |
|---|---|---|---|---|---|---|
| HE-5000-SBL-M | Mono | 5.1MP | 45.5fps | 1, 2.5, 5GigE | Sony IMX547 | 2.74×2.74µm |
| HE-5000-SBL-C | Color | 5.1MP | 45.5fps | 1, 2.5, 5GigE | Sony IMX547 | 2.74×2.74µm |
| HE-8000-SBL-M | Mono | 8.1MP | 36.5fps | 1, 2.5, 5GigE | Sony IMX546 | 2.74×2.74µm |
| HE-8000-SBL-C | Color | 8.1MP | 36.5fps | 1, 2.5, 5GigE | Sony IMX546 | 2.74×2.74µm |
| HE-12000-SBL-M | Mono | 12.4MP | 34fps | 1, 2.5, 5GigE | Sony IMX545 | 2.74×2.74µm |
| HE-12000-SBL-C | Color | 12.4MP | 34fps | 1, 2.5, 5GigE | Sony IMX545 | 2.74×2.74µm |
| HE-16000-SBL-M | Mono | 16.13MP | 26fps | 1, 2.5, 5GigE | Sony IMX542 | 2.74×2.74µm |
| HE-16000-SBL-C | Color | 16.13MP | 26fps | 1, 2.5, 5GigE | Sony IMX542 | 2.74×2.74µm |
| HE-20000-SBL-M | Mono | 20.28MP | 21.5fps | 1, 2.5, 5GigE | Sony IMX541 | 2.74×2.74µm |
| HE-20000-SBL-C | Color | 20.28MP | 21.5fps | 1, 2.5, 5GigE | Sony IMX541 | 2.74×2.74µm |
| HE-25000-SBL-M | Mono | 24.47MP | 17.5fps | 1, 2.5, 5GigE | Sony IMX540 | 2.74×2.74µm |
| HE-25000-SBL-C | Color | 24.47MP | 17.5fps | 1, 2.5, 5GigE | Sony IMX540 | 2.74×2.74µm |
| HR-5000-SBL-M | Mono | 5.1MP | 99fps | 10GigE SFP+ | Sony IMX547 | 2.74×2.74µm |
| HR-5000-SBL-C | Color | 5.1MP | 99fps | 10GigE SFP+ | Sony IMX547 | 2.74×2.74µm |
| HR-8000-SBL-M | Mono | 8.1MP | 73fps | 10GigE SFP+ | Sony IMX546 | 2.74×2.74µm |
| HR-8000-SBL-C | Color | 8.1MP | 73fps | 10GigE SFP+ | Sony IMX546 | 2.74×2.74µm |
| HR-12000-SBL-M | Mono | 12.4MP | 68fps | 10GigE SFP+ | Sony IMX545 | 2.74×2.74µm |
| HR-12000-SBL-C | Color | 12.4MP | 68fps | 10GigE SFP+ | Sony IMX545 | 2.74×2.74µm |
| HR-16000-SBL-M | Mono | 16.13MP | 52fps | 10GigE SFP+ | Sony IMX542 | 2.74×2.74µm |
| HR-16000-SBL-C | Color | 16.13MP | 52fps | 10GigE SFP+ | Sony IMX542 | 2.74×2.74µm |
| HR-20000-SBL-M | Mono | 20.28MP | 43fps | 10GigE SFP+ | Sony IMX541 | 2.74×2.74µm |
| HR-20000-SBL-C | Color | 20.28MP | 43fps | 10GigE SFP+ | Sony IMX541 | 2.74×2.74µm |
| HR-25000-SBL-M | Mono | 24.47MP | 35fps | 10GigE SFP+ | Sony IMX540 | 2.74×2.74µm |
| HR-25000-SBL-C | Color | 24.47MP | 35fps | 10GigE SFP+ | Sony IMX540 | 2.74×2.74µm |
| HB-5000-SB-M | Mono | 5.1MP | 269fps | 25GigE SFP28 | Sony S IMX537 | 2.74×2.74µm |
| HB-5000-SB-C | Color | 5.1MP | 269fps | 25GigE SFP28 | Sony S IMX537 | 2.74×2.74µm |
| HB-8000-SB-M | Mono | 8.1MP | 201fps | 25GigE SFP28 | Sony S IMX536 | 2.74×2.74µm |
| HB-8000-SB-C | Color | 8.1MP | 201fps | 25GigE SFP28 | Sony S IMX536 | 2.74×2.74µm |
| HB-12000-SB-M | Mono | 12.4MP | 192fps | 25GigE SFP28 | Sony S IMX535 | 2.74×2.74µm |
| HB-12000-SB-C | Color | 12.4MP | 192fps | 25GigE SFP28 | Sony S IMX535 | 2.74×2.74µm |
| HB-16000-SB-M | Mono | 16.13MP | 145fps | 25GigE SFP28 | Sony S IMX532 | 2.74×2.74µm |
| HB-16000-SB-C | Color | 16.13MP | 145fps | 25GigE SFP28 | Sony S IMX532 | 2.74×2.74µm |
| HB-20000-SB-M | Mono | 20.28MP | 100fps | 25GigE SFP28 | Sony S IMX531 | 2.74×2.74µm |
| HB-20000-SB-C | Color | 20.28MP | 100fps | 25GigE SFP28 | Sony S IMX531 | 2.74×2.74µm |
| HB-25000-SB-M | Mono | 24.47MP | 98fps | 25GigE SFP28 | Sony S IMX530 | 2.74×2.74µm |
| HB-25000-SB-C | Color | 24.47MP | 98fps | 25GigE SFP28 | Sony S IMX530 | 2.74×2.74µm |
| HB-127-S-M | Mono | 127.7MP | 17fps | 25GigE SFP28 | Sony IMX661 | 3.45×3.45µm |
| HB-127-S-C | Color | 127.7MP | 17fps | 25GigE SFP28 | Sony IMX661 | 3.45×3.45µm |
| HZ-100-G-M | Mono | 103.7MP | 24fps | 100GigE QSFP28 | Gpixel GMAX32103 | 3.2×3.2µm |
| HZ-100-G-C | Color | 103.7MP | 24fps | 100GigE QSFP28 | Gpixel GMAX32103 | 3.2×3.2µm |

Line Scan Cameras

| Model | Chroma | Resolution | Line Rate | Tri Rate | Interface | Sensor Name | Pixel Size |
|---|---|---|---|---|---|---|---|
| LR-4KG35-M | Mono | 4Kx2 | 172kHz | 57kHz | 10GigE SFP+ | Gpixel GL3504 | 3.5×3.5µm |
| LR-4KG35-C | Color | 4Kx2 | 172kHz | 57kHz | 10GigE SFP+ | Gpixel GL3504 | 3.5×3.5µm |
| TLZ-9KG5-M | Mono | 9K 256 TDI | 608kHz | – | 100GigE QSFP28 | Gpixel GLT5009BSI | 5×5µm |
| LB-8KG7-M | Mono | 8Kx4 | 300kHz | 100kHz | 25GigE SFP28 | Gpixel GL7008 | 7×7µm |
| LB-8KG7-C | Color | 8Kx4 | 300kHz | 100kHz | 25GigE SFP28 | Gpixel GL7008 | 7×7µm |
| LZ-16KG5-M | Mono | 16Kx16 | 400kHz | 133kHz | 100GigE QSFP28 | Gpixel GL5016 | 5×5µm |
| LZ-16KG5-C | Color | 16Kx16 | 400kHz | 133kHz | 100GigE QSFP28 | Gpixel GL5016 | 5×5µm |

For additional camera options, check out our interactive system designer tool.